Classifying Text Documents with Naive Bayes in Python

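The naive Bayes algorithm is a simple but effective tool for sorting documents, or the words in a text, into categories. For example, if a document contains words such as "humid", "rainy", or "cloudy", we can use Bayes' rule to decide whether the document belongs to a category such as "sunny day" or "rainy day".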

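Note that naive Bayes assumes the words in a document are independent of one another once the category is known. Given the nuances of language, this assumption rarely holds, which is why the algorithm has "naive" in its name. Even so, it performs well enough in practice.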
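The independence assumption can be written as a compact decision rule. The notation here is introduced only for illustration and is not part of the original text: c is a category, w_i is the i-th word of the document to classify, and n is the number of words in it.

```latex
P(c \mid w_1, \dots, w_n) \;\propto\; P(c) \prod_{i=1}^{n} P(w_i \mid c),
\qquad
\hat{c} = \arg\max_{c} \; P(c) \prod_{i=1}^{n} P(w_i \mid c)
```

The product over individual words is exactly where the "naive" assumption enters: without it, the joint probability of all the words given the category would have to be estimated directly.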

步骤

- 步骤1 - 输入文档数量、文本字符串和对应类别的信息。将文本和关键词分割,并使用列表对其进行处理,输入需要分类的字符串/文本。

-

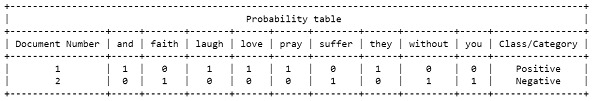

步骤2 - 创建一个列表,其中将存储每个文档中所有关键词的频率。使用pretty table库以表格形式打印出来。根据需要命名表头。

-

步骤3 - 计算每个类别(正面和负面)的总单词数量和文档数量。

-

步骤4 - 计算每个单词的概率,并四舍五入保留四位小数。

-

步骤5 - 使用贝叶斯公式计算类别概率,并四舍五入保留八位小数。

-

步骤6 - 使用贝叶斯公式计算类别概率,并四舍五入保留八位小数。

-

步骤7 - 针对负面类别重复上述两个步骤。

-

步骤8 - 比较两个类别的概率结果并打印结果。
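The word probabilities in Step 4 are estimated with add-one (Laplace) smoothing, which the example script applies as (count + 1) / (words in the class + vocabulary size). Here is a minimal sketch of that single step. The helper name `smoothed_word_probs` and the use of `collections.Counter` are choices made for this illustration, not part of the original script.

```python
from collections import Counter

def smoothed_word_probs(class_docs, vocabulary):
    """Return P(word | class) for every vocabulary word, with add-one smoothing."""
    # Count every word across all documents of this class
    counts = Counter(word for doc in class_docs for word in doc.split())
    # Denominator: total words in the class plus the vocabulary size
    denom = sum(counts.values()) + len(vocabulary)
    return {word: (counts[word] + 1) / denom for word in vocabulary}

vocab = sorted({"they", "love", "laugh", "and", "pray",
                "without", "faith", "you", "suffer"})
positive_probs = smoothed_word_probs(["they love laugh and pray"], vocab)

print(round(positive_probs["love"], 4))    # (1 + 1) / (5 + 9) -> 0.1429
print(round(positive_probs["suffer"], 4))  # (0 + 1) / (5 + 9) -> 0.0714
```

Because of the added 1, a word that never occurs in a class still gets a small non-zero probability, so a single unseen word cannot drive the whole product to zero.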

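Example

To keep this example simple and easy to follow, we use only two documents of one sentence each, and run naive Bayes classification on a string that resembles both sentences. Each document has a category, and the goal is to decide which category the test string belongs to.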

# Step 1 - Input the required data and split the text into keywords
import prettytable

total_documents = 2
text_list = ["they love laugh and pray", "without faith you suffer"]
category_list = ["Positive", "Negative"]

doc_class = []  # one [word_list, category] entry per document
keywords = []
for i in range(total_documents):
    words = text_list[i].split()
    doc_class.append([words, category_list[i]])
    keywords.extend(words)

keywords = sorted(set(keywords))  # unique keywords in alphabetical order

# Split the test string so that later loops iterate over words, not characters
to_find = "suffer without love laugh and pray".split()

# Step 2 - Make the frequency table for the keywords and print it
probability_table = []
for i in range(total_documents):
    probability_table.append([doc_class[i][0].count(k) for k in keywords])

print('\n')
keywords.insert(0, 'Document Number')
keywords.append("Class/Category")
Prob_Table = prettytable.PrettyTable()
Prob_Table.field_names = keywords
Prob_Table.title = 'Probability table'
x = 0
for i in probability_table:
    i.insert(0, x + 1)
    i.append(doc_class[x][1])
    Prob_Table.add_row(i)
    x = x + 1
print(Prob_Table)
print('\n')

for i in probability_table:
    i.pop(0)  # drop the document number; the category stays as the last element

# Step 3 - Count the words and documents in each category
totalpluswords = 0
totalnegwords = 0
totalplus = 0
totalneg = 0
vocabulary = len(keywords) - 2  # exclude the two extra table headers
for i in probability_table:
    if i[-1] == "Positive":
        totalplus += 1
        totalpluswords += sum(i[:-1])
    else:
        totalneg += 1
        totalnegwords += sum(i[:-1])

keywords.pop(0)  # remove 'Document Number'
keywords.pop()   # remove 'Class/Category'

# Step 4 - Find the probability of each word for the positive class
temp = []
for i in to_find:
    count = 0
    x = keywords.index(i)
    for j in probability_table:
        if j[-1] == "Positive":
            count = count + j[x]
    temp.append(count)

# Add-one smoothing, rounded to four decimal places
for i in range(len(temp)):
    temp[i] = format((temp[i] + 1) / (vocabulary + totalpluswords), ".4f")
print()
temp = [float(f) for f in temp]
print("Probabilities of each word in the 'Positive' category are: ")
h = 0
for i in to_find:
    print(f"P({i}/+) = {temp[h]}")
    h = h + 1
print()

# Step 5 - Find the probability of the class using Bayes' formula
prob_pos = float(format(totalplus / (totalplus + totalneg), ".8f"))
for i in temp:
    prob_pos = prob_pos * i
prob_pos = format(prob_pos, ".8f")
print("Probability of text in 'Positive' class is :", prob_pos)
print()

# Step 6 - Repeat the above two steps for the negative class
temp = []
for i in to_find:
    count = 0
    x = keywords.index(i)
    for j in probability_table:
        if j[-1] == "Negative":
            count = count + j[x]
    temp.append(count)

for i in range(len(temp)):
    temp[i] = format((temp[i] + 1) / (vocabulary + totalnegwords), ".4f")
print()
temp = [float(f) for f in temp]
print("Probabilities of each word in the 'Negative' category are: ")
h = 0
for i in to_find:
    print(f"P({i}/-) = {temp[h]}")
    h = h + 1
print()

prob_neg = float(format(totalneg / (totalplus + totalneg), ".8f"))
for i in temp:
    prob_neg = prob_neg * i
prob_neg = format(prob_neg, ".8f")
print("Probability of text in 'Negative' class is :", prob_neg)
print('\n')

# Step 7 - Compare the probabilities (as numbers, not strings) and print the result
if float(prob_pos) > float(prob_neg):
    print(f"By Naive Bayes Classification, we can conclude that the given text belongs to 'Positive' class with the probability {prob_pos}")
else:
    print(f"By Naive Bayes Classification, we can conclude that the given text belongs to 'Negative' class with the probability {prob_neg}")
print('\n')

We iterate over each document and store its keywords in a separate list. A second pass stores the frequency of every keyword in each document and prints the table. The code then counts the words and documents in the positive and negative categories and determines the number of unique keywords, which is the vocabulary size.

Next, for each word in the input text we count its occurrences in the positive category, convert the counts into smoothed probabilities, and store them in a new list. We then use Bayes' formula to compute the probability that the input text belongs to the positive category. The probabilities for the negative category are computed and stored in the same way. Finally, we compare the two results and choose the category with the higher probability.

Output

After the frequency table, the script prints:

Probabilities of each word in the 'Positive' category are:
P(suffer/+) = 0.0714
P(without/+) = 0.0714
P(love/+) = 0.1429
P(laugh/+) = 0.1429
P(and/+) = 0.1429
P(pray/+) = 0.1429

Probability of text in 'Positive' class is : 0.00000106

Probabilities of each word in the 'Negative' category are:
P(suffer/-) = 0.1538
P(without/-) = 0.1538
P(love/-) = 0.0769
P(laugh/-) = 0.0769
P(and/-) = 0.0769
P(pray/-) = 0.0769

Probability of text in 'Negative' class is : 0.00000041

By Naive Bayes Classification, we can conclude that the given text belongs to 'Positive' class with the probability 0.00000106
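The class probabilities are already tiny for a six-word input, and for longer texts the product of many small factors can underflow to zero. A common remedy is to add logarithms instead of multiplying probabilities. Here is a minimal, self-contained sketch of that idea on the same two training sentences. The function names `train` and `log_scores` are hypothetical, and this is an alternative formulation rather than the script's own method.

```python
import math
from collections import Counter

def train(docs):
    """docs is a list of (text, label) pairs; returns word counts, doc counts, vocabulary."""
    word_counts, doc_counts, vocab = {}, Counter(), set()
    for text, label in docs:
        words = text.split()
        word_counts.setdefault(label, Counter()).update(words)
        doc_counts[label] += 1
        vocab.update(words)
    return word_counts, doc_counts, vocab

def log_scores(text, word_counts, doc_counts, vocab):
    """Return log P(class) + sum of log P(word | class), with add-one smoothing."""
    total_docs = sum(doc_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        denom = sum(counts.values()) + len(vocab)
        score = math.log(doc_counts[label] / total_docs)  # log prior
        for word in text.split():
            score += math.log((counts[word] + 1) / denom)  # log likelihood
        scores[label] = score
    return scores

docs = [("they love laugh and pray", "Positive"),
        ("without faith you suffer", "Negative")]
model = train(docs)
scores = log_scores("suffer without love laugh and pray", *model)
best = max(scores, key=scores.get)  # class with the highest log score
print(scores)
print(best)
```

Because the logarithm is monotonic, the class with the highest log score is also the class with the highest probability, so the comparison in Step 7 carries over unchanged.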

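Conclusion

Naive Bayes works well without requiring a large amount of training. However, for new data containing words that do not appear in the training documents, it may give absurd results or raise errors. Even so, the algorithm plays an important role in real-time prediction and in filtering applications such as spam filtering. Other classification algorithms used for similar tasks include logistic regression, decision trees, and random forests.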
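In the example script, the `keywords.index(i)` lookup raises a `ValueError` for any word that appears in no training document. One simple way to guard against this is to drop unknown words before scoring. This sketch assumes that discarding such words is acceptable, which is a design choice and not something the script itself does. The helper name `known_tokens` is hypothetical.

```python
def known_tokens(text, vocabulary):
    """Split text and separate tokens found in the training vocabulary from the rest."""
    vocab = set(vocabulary)
    kept, skipped = [], []
    for token in text.split():
        if token in vocab:
            kept.append(token)
        else:
            skipped.append(token)
    return kept, skipped

vocabulary = ["and", "faith", "laugh", "love", "pray",
              "suffer", "they", "without", "you"]
kept, skipped = known_tokens("they suffer in silence", vocabulary)
print(kept)     # ['they', 'suffer']
print(skipped)  # ['in', 'silence']
```

Reporting the skipped words is useful in practice: if most of an input is discarded, the resulting classification rests on very little evidence.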

极客笔记
