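Buffer exchange between the framework and the HAL: the "layer" through which the camera framework talks to the HAL lives mainly in Camera3Device.cpp, which contains the Camera3Device class and an internal thread.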


Overview and questions

- What does a buffer look like?

- How does the framework (fw) send buffers to the HAL?

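When the framework sends a capture request to the HAL, buffers are passed along with it, so understanding this process helps in understanding how buffers are passed.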

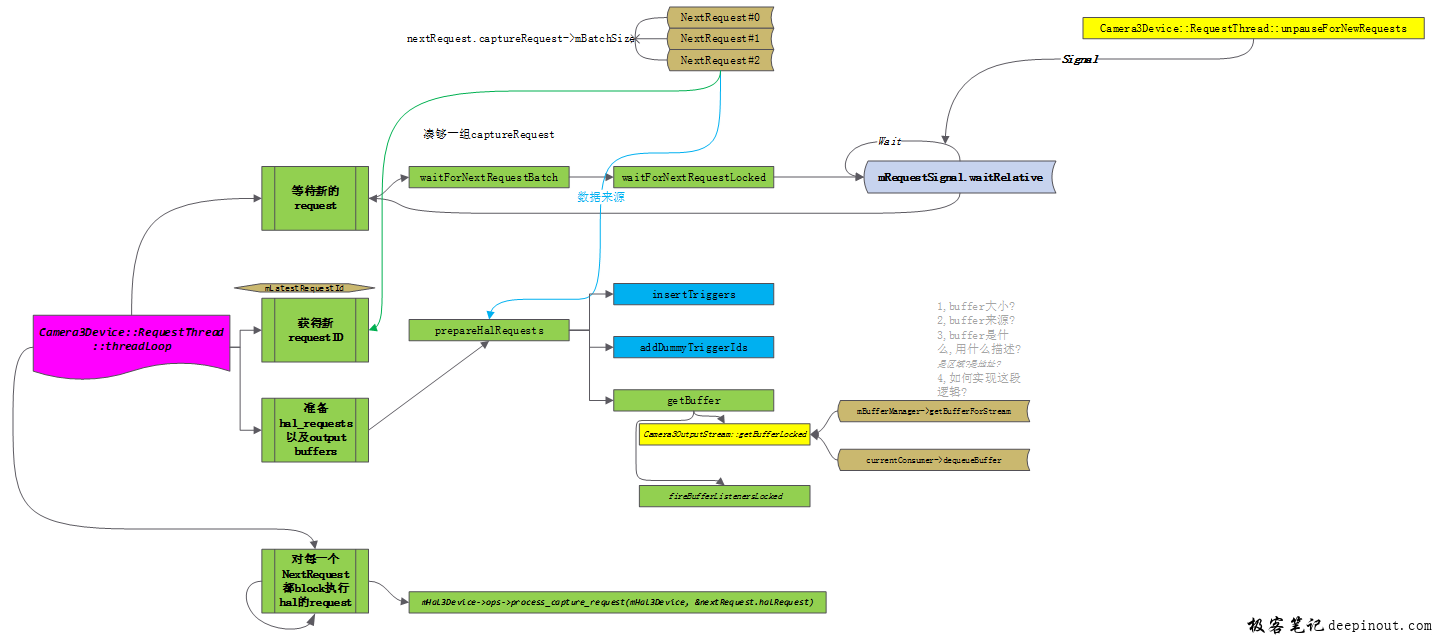

Overall, the core of the interaction between the framework and the HAL is the RequestThread of the Camera3Device class. Its main work is:

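- Wait for a new request. The thread sleeps on a Condition object, which wraps pthread_cond_wait.

- Once a request is available, take out its request ID and prepare hal_request, the camera3_capture_request_t structure that the HAL needs.

- For each request, make a blocking call to the HAL's process_capture_request function.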
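The loop above is a classic producer/consumer wait. Below is a minimal C++17 sketch of its shape. It assumes standard-library primitives in place of Android's Mutex/Condition, and it uses a hypothetical `PendingRequest` type. The thread blocks with a timeout until a request arrives, then makes one blocking call per request. `RequestQueue`, `requestThreadIteration` and the `halCall` parameter are illustrative names, not Camera3Device APIs.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstdint>
#include <deque>
#include <mutex>
#include <optional>

// Hypothetical, simplified stand-in for a queued capture request.
struct PendingRequest {
    uint32_t frameNumber;
};

// Producers push requests; the request thread sleeps on a condition
// variable until one arrives or the timeout expires.
class RequestQueue {
public:
    void push(PendingRequest r) {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mQueue.push_back(r);
        }
        mCond.notify_one();
    }

    // Returns std::nullopt on timeout, like a bounded wait in a thread loop.
    std::optional<PendingRequest> waitForNext(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mMutex);
        if (!mCond.wait_for(lock, timeout, [this] { return !mQueue.empty(); })) {
            return std::nullopt;
        }
        PendingRequest r = mQueue.front();
        mQueue.pop_front();
        return r;
    }

private:
    std::mutex mMutex;
    std::condition_variable mCond;
    std::deque<PendingRequest> mQueue;
};

// One iteration of a request-thread loop: wait, then hand the request to a
// blocking call. halCall stands in for process_capture_request.
template <typename HalCall>
bool requestThreadIteration(RequestQueue &q, HalCall halCall) {
    auto next = q.waitForNext(std::chrono::milliseconds(50));
    if (!next) return true;      // timed out; loop again
    return halCall(*next) == 0;  // 0 means success in this sketch
}
```

Returning `true` on a timeout mirrors the usual Android `threadLoop()` convention: keep looping until the thread is asked to exit.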

```cpp
typedef struct camera3_capture_request {
    // Same as in camera3_capture_result.
    uint32_t frame_number;

    // 1. Processing parameters used by this request.
    // 2. Passing NULL means "reuse the previous settings".
    // 3. The first request must not pass NULL.
    const camera_metadata_t *settings;

    // 1. Input buffer.
    camera3_stream_buffer_t *input_buffer;

    // 1. Number of output buffers; must be at least 1.
    uint32_t num_output_buffers;

    // 1. Buffers the HAL will fill.
    // 2. The HAL must wait on the acquire fences before writing to them.
    const camera3_stream_buffer_t *output_buffers;
} camera3_capture_request_t;
```

- A related question: what data structure does the framework send to the HAL?

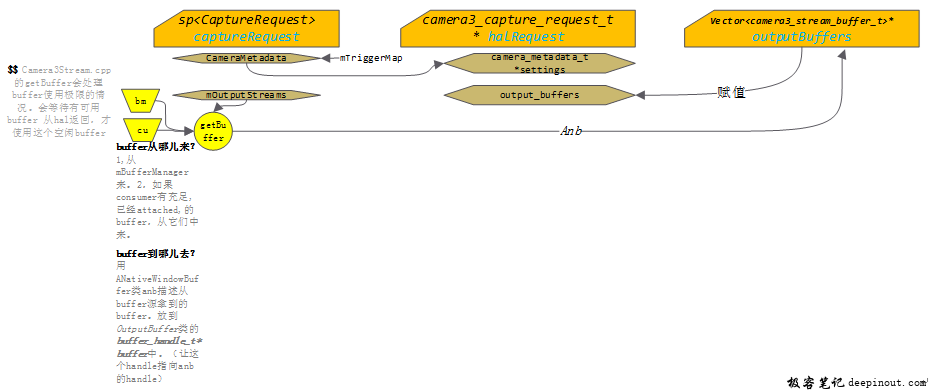

When the hal_request needed by the HAL is constructed, it contains:

- Metadata, filled in according to mTriggerMap.

- The buffers we care about, obtained from Camera3BufferManager::getBufferForStream.
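Below is a minimal sketch of how a hal_request could be assembled. It rests on some loud assumptions. `Settings`, `StreamBuffer`, `HalRequest`, `BufferPool` and `buildHalRequest` are all hypothetical simplifications; the real code uses CameraMetadata, camera3_stream_buffer_t and the stream and buffer-manager classes.

The sketch does three things:
- It merges pending triggers into the settings.
- It takes one buffer per output stream.
- It enforces the two rules from the struct comments above: settings may only be NULL after the first request, and there must be at least one output buffer.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <map>
#include <optional>
#include <vector>

// Hypothetical, simplified types (not camera_metadata_t / camera3_* structs).
using Settings = std::map<uint32_t, int32_t>;  // tag -> value
struct StreamBuffer { int streamId; int handle; };
struct HalRequest {
    uint32_t frameNumber;
    const Settings *settings;                  // nullptr = "reuse previous settings"
    std::vector<StreamBuffer> outputBuffers;
};

// Hypothetical per-stream pool standing in for getBufferForStream().
class BufferPool {
public:
    void add(int streamId, int handle) { mFree[streamId].push_back(handle); }
    std::optional<StreamBuffer> getBufferForStream(int streamId) {
        auto &q = mFree[streamId];
        if (q.empty()) return std::nullopt;
        StreamBuffer b{streamId, q.front()};
        q.pop_front();
        return b;
    }
private:
    std::map<int, std::deque<int>> mFree;
};

// Merge triggers into the settings, then grab one buffer per stream.
// Returns false if a rule from the struct comments would be violated.
bool buildHalRequest(uint32_t frameNumber, Settings &settings,
                     const std::map<uint32_t, int32_t> &triggerMap,
                     const std::vector<int> &streamIds, BufferPool &pool,
                     bool isFirstRequest, bool settingsChanged, HalRequest &out) {
    for (const auto &[tag, value] : triggerMap) settings[tag] = value;
    out.frameNumber = frameNumber;
    // The first request must carry settings; later unchanged ones may pass nullptr.
    bool sendSettings = isFirstRequest || settingsChanged || !triggerMap.empty();
    out.settings = sendSettings ? &settings : nullptr;
    out.outputBuffers.clear();
    for (int id : streamIds) {
        auto b = pool.getBufferForStream(id);
        if (!b) return false;                  // no free buffer for this stream
        out.outputBuffers.push_back(*b);
    }
    return !out.outputBuffers.empty();         // num_output_buffers must be >= 1
}
```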

The figure below shows where each member of hal_request comes from.

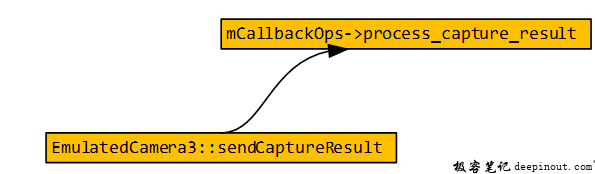

- How does the HAL send data back to the framework?

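This relies mainly on the callback that the framework's Camera3Device class registers with the HAL. When a capture finishes, the HAL calls this callback to hand the results back to the framework. This raises a few smaller questions: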

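- What data structure does the HAL give the framework?

- How is the Camera3Device callback registered with the HAL?

- When does the HAL have capture results?

- How does the HAL fill in capture results?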

What data structure does the HAL give the framework?

First, the data structure the HAL gives the framework is:

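```cpp
typedef struct camera3_capture_result {
    // 1. Monotonically increasing value.
    // 2. Set by the framework.
    // 3. Also identifies asynchronous notifications (camera3_callback_ops_t.notify()).
    uint32_t frame_number;

    // 1. Result metadata of the capture.
    // 2. Contains the capture parameters, post-processing state, 3A state and statistics.
    // 3. Delivered by calling process_capture_result() with the matching frame_number.
    const camera_metadata_t *result;

    // 1. Number of output buffers.
    uint32_t num_output_buffers;

    // 1. Handles of the output buffers.
    // 2. When the HAL calls process_capture_result, a buffer "may" not be filled yet.
    // 3. Whether it is filled is signaled by its sync fences.
    const camera3_stream_buffer_t *output_buffers;

    // 1. Handle of the input buffer.
    const camera3_stream_buffer_t *input_buffer;

    // 1. The HAL must first set android.request.partialResultCount in its metadata,
    //    which says how many partial results make up one frame.
    // 2. If partial results are not used, the HAL should not set this metadata.
    uint32_t partial_result;
} camera3_capture_result_t;
```

How is the Camera3Device callback registered with the HAL?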

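Once the HAL has filled in the data, it must return it to the framework. For this it uses the Camera3Device object, which the framework passes in as the callback when it initializes the camera HAL.

- First, open obtains the HAL's device structure.

- Then the initialize function in ops passes the current Camera3Device object into the HAL.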
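The open/initialize handshake relies on a C idiom:
- The device object embeds a generic header as its first member.
- open() hands out a pointer to that header.
- Both sides cast back to the full type.

Below is a pared-down sketch of the idiom. The `Mini*` types are hypothetical stand-ins for hw_device_t, camera3_device_t and the ops tables, and `miniOpen`/`miniInitialize` are illustrative, not the real HAL entry points.

```cpp
#include <cassert>

// Hypothetical, pared-down stand-ins for the HAL structs.
struct MiniCallbackOps { int tag; };
struct MiniDevice;
struct MiniDeviceOps {
    int (*initialize)(const MiniDevice *dev, const MiniCallbackOps *cb);
};
struct MiniHwDevice { int version; };
struct MiniDevice {
    MiniHwDevice common;        // first member, so &common has the device's address
    const MiniDeviceOps *ops;
};

// HAL side: the camera object embeds the C struct as its first member.
struct MiniCamera {
    MiniDevice device;
    const MiniCallbackOps *callbackOps = nullptr;
};

static int miniInitialize(const MiniDevice *dev, const MiniCallbackOps *cb) {
    // Recover the owning camera object, then remember the framework callback,
    // as EmulatedCamera3::initializeDevice does with mCallbackOps.
    auto *cam = reinterpret_cast<MiniCamera *>(const_cast<MiniDevice *>(dev));
    cam->callbackOps = cb;
    return 0;
}
static const MiniDeviceOps kMiniOps = {miniInitialize};

// Like module->open(): returns a pointer to the generic header only.
int miniOpen(MiniCamera *cam, MiniHwDevice **out) {
    cam->device.common.version = 0x303;  // arbitrary value for the sketch
    cam->device.ops = &kMiniOps;
    *out = &cam->device.common;
    return 0;
}
```

The casts are only valid because each embedded struct is the first member of a standard-layout type. That is why the HAL keeps `common` first.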

```cpp
status_t Camera3Device::initialize(CameraModule *module)
{
    ATRACE_CALL();
    Mutex::Autolock il(mInterfaceLock);
    Mutex::Autolock l(mLock);

    /** Open HAL device */
    status_t res;
    String8 deviceName = String8::format("%d", mId);
    camera3_device_t *device;

    ATRACE_BEGIN("camera3->open");
    res = module->open(deviceName.string(),
            reinterpret_cast<hw_device_t**>(&device)); // Returns the HAL's device; everything after this uses it.

    camera_info info;
    res = module->getCameraInfo(mId, &info);

    /** Initialize device with callback functions */
    res = device->ops->initialize(device, this); // `this` is the key: it is the Camera3Device object itself.

    /** Start up status tracker thread */
    mStatusTracker = new StatusTracker(this);
    res = mStatusTracker->run(String8::format("C3Dev-%d-Status", mId).string());

    /** Register in-flight map to the status tracker */
    mInFlightStatusId = mStatusTracker->addComponent();

    /** Create buffer manager */
    /** Camera3BufferManager is created here. */
    mBufferManager = new Camera3BufferManager(); // buffer manager

    bool aeLockAvailable = false;
    camera_metadata_ro_entry aeLockAvailableEntry;
    res = find_camera_metadata_ro_entry(info.static_camera_characteristics,
            ANDROID_CONTROL_AE_LOCK_AVAILABLE, &aeLockAvailableEntry);
    if (res == OK && aeLockAvailableEntry.count > 0) {
        aeLockAvailable = (aeLockAvailableEntry.data.u8[0] ==
                ANDROID_CONTROL_AE_LOCK_AVAILABLE_TRUE);
    }

    /** Start up request queue thread */
    mRequestThread = new RequestThread(this, mStatusTracker, device, aeLockAvailable);
    res = mRequestThread->run(String8::format("C3Dev-%d-ReqQueue", mId).string());

    mPreparerThread = new PreparerThread();

    /** Everything is good to go */
    mDeviceVersion = device->common.version;
    mDeviceInfo = info.static_camera_characteristics;
    mHal3Device = device;

    internalUpdateStatusLocked(STATUS_UNCONFIGURED);
    mNextStreamId = 0;
    mDummyStreamId = NO_STREAM;
    mNeedConfig = true;
    mPauseStateNotify = false;

    // Measure the clock domain offset between camera and video/hw_composer
    camera_metadata_entry timestampSource =
            mDeviceInfo.find(ANDROID_SENSOR_INFO_TIMESTAMP_SOURCE);
    if (timestampSource.count > 0 && timestampSource.data.u8[0] ==
            ANDROID_SENSOR_INFO_TIMESTAMP_SOURCE_REALTIME) {
        mTimestampOffset = getMonoToBoottimeOffset();
    }

    // Will the HAL be sending in early partial result metadata?
    if (mDeviceVersion >= CAMERA_DEVICE_API_VERSION_3_2) {
        camera_metadata_entry partialResultsCount =
                mDeviceInfo.find(ANDROID_REQUEST_PARTIAL_RESULT_COUNT);
        if (partialResultsCount.count > 0) {
            mNumPartialResults = partialResultsCount.data.i32[0];
            mUsePartialResult = (mNumPartialResults > 1);
        }
    } else {
        camera_metadata_entry partialResultsQuirk =
                mDeviceInfo.find(ANDROID_QUIRKS_USE_PARTIAL_RESULT);
        if (partialResultsQuirk.count > 0 && partialResultsQuirk.data.u8[0] == 1) {
            mUsePartialResult = true;
        }
    }

    camera_metadata_entry configs =
            mDeviceInfo.find(ANDROID_SCALER_AVAILABLE_STREAM_CONFIGURATIONS);
    for (uint32_t i = 0; i < configs.count; i += 4) {
        if (configs.data.i32[i] == HAL_PIXEL_FORMAT_IMPLEMENTATION_DEFINED &&
                configs.data.i32[i + 3] ==
                ANDROID_SCALER_AVAILABLE_STREAM_CONFIGURATIONS_INPUT) {
            mSupportedOpaqueInputSizes.add(Size(configs.data.i32[i + 1],
                    configs.data.i32[i + 2]));
        }
    }

    return OK;
}
```

Next, a closer look at open: it goes through the open function in common.methods.

```cpp
camera_module_t HAL_MODULE_INFO_SYM = {
    common: {
        tag: HARDWARE_MODULE_TAG,
        module_api_version: CAMERA_MODULE_API_VERSION_2_3,
        hal_api_version: HARDWARE_HAL_API_VERSION,
        id: CAMERA_HARDWARE_MODULE_ID,
        name: "Emulated Camera Module",
        author: "The Android Open Source Project",
        methods: &android::EmulatedCameraFactory::mCameraModuleMethods,
        dso: NULL,
        reserved: {0},
    },
    get_number_of_cameras: android::EmulatedCameraFactory::get_number_of_cameras,
    get_camera_info: android::EmulatedCameraFactory::get_camera_info,
    set_callbacks: android::EmulatedCameraFactory::set_callbacks,
    get_vendor_tag_ops: android::EmulatedCameraFactory::get_vendor_tag_ops,
    open_legacy: android::EmulatedCameraFactory::open_legacy
};

struct hw_module_methods_t EmulatedCameraFactory::mCameraModuleMethods = {
    open: EmulatedCameraFactory::device_open
};

// Eventually:
status_t EmulatedCamera3::connectCamera(hw_device_t** device) {
    *device = &common;  // hand out the embedded hw_device_t
    return NO_ERROR;
}

EmulatedCamera3::EmulatedCamera3(int cameraId,
        struct hw_module_t* module):
        EmulatedBaseCamera(cameraId,
                CAMERA_DEVICE_API_VERSION_3_3,
                &common,
                module),
        mStatus(STATUS_ERROR)
{
    common.close = EmulatedCamera3::close;
    ops = &sDeviceOps;
    mCallbackOps = NULL;
}
```

- So how does the HAL respond to initialize?

```cpp
camera3_device_ops_t EmulatedCamera3::sDeviceOps = {
    EmulatedCamera3::initialize,
    EmulatedCamera3::configure_streams,
    /* DEPRECATED: register_stream_buffers */ nullptr,
    EmulatedCamera3::construct_default_request_settings,
    EmulatedCamera3::process_capture_request,
    /* DEPRECATED: get_metadata_vendor_tag_ops */ nullptr,
    EmulatedCamera3::dump,
    EmulatedCamera3::flush
};
```

In initialize, the HAL stores the framework's Camera3Device object as mCallbackOps.

```cpp
int EmulatedCamera3::initialize(const struct camera3_device *d,
        const camera3_callback_ops_t *callback_ops) {
    EmulatedCamera3* ec = getInstance(d);
    return ec->initializeDevice(callback_ops);
}

status_t EmulatedCamera3::initializeDevice(
        const camera3_callback_ops *callbackOps) {
    ...
    mCallbackOps = callbackOps;
    ...
}
```

How does the HAL use this callback?

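After the HAL has filled in the data, it calls mCallbackOps to send the data to the framework.

Keep in mind that mCallbackOps is really the Camera3Device object, so the HAL ends up calling its process_capture_result, which Camera3Device sets up in its constructor.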
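Why can the HAL call a C function pointer and land in a C++ member function? As the static_cast in sProcessCaptureResult implies, Camera3Device derives from camera3_callback_ops. So `this` doubles as the callback table, and a static trampoline casts the pointer back to the object.

The sketch below is a self-contained version of that pattern. It uses hypothetical names (`MiniCallbackOps`, `MiniDevice`, `halSendResult`) and a single frame-number argument in place of camera3_capture_result.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical, pared-down C callback table that the HAL calls into.
struct MiniCallbackOps {
    void (*process_capture_result)(const MiniCallbackOps *cb, uint32_t frameNumber);
};

// Framework-side class that *is* the callback table (it inherits from it),
// so passing `this` to the HAL hands over a usable MiniCallbackOps pointer.
class MiniDevice : private MiniCallbackOps {
public:
    MiniDevice() { MiniCallbackOps::process_capture_result = &sProcessCaptureResult; }
    const MiniCallbackOps *asCallbackOps() const { return this; }
    uint32_t lastFrame() const { return mLastFrame; }

private:
    // Static trampoline: cast the C pointer back to the C++ object.
    static void sProcessCaptureResult(const MiniCallbackOps *cb, uint32_t frameNumber) {
        MiniDevice *d = const_cast<MiniDevice *>(static_cast<const MiniDevice *>(cb));
        d->processCaptureResult(frameNumber);
    }
    void processCaptureResult(uint32_t frameNumber) { mLastFrame = frameNumber; }
    uint32_t mLastFrame = 0;
};

// "HAL" side: it only knows the C table.
void halSendResult(const MiniCallbackOps *cb, uint32_t frameNumber) {
    cb->process_capture_result(cb, frameNumber);
}
```

The static_cast is a plain base-to-derived pointer adjustment. It is safe here only because every MiniCallbackOps pointer the HAL receives really points into a MiniDevice.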

```cpp
Camera3Device::Camera3Device(int id):
        …
{
    camera3_callback_ops::process_capture_result = &sProcessCaptureResult;
}
```

The static function then casts the callback pointer back to Camera3Device:

```cpp
void Camera3Device::sProcessCaptureResult(const camera3_callback_ops *cb,
        const camera3_capture_result *result) {
    Camera3Device *d =
            const_cast<Camera3Device*>(static_cast<const Camera3Device*>(cb));
    d->processCaptureResult(result);
}
```

When the HAL has capture results

- When does the HAL have capture results?

- How does the HAL fill in capture results?

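What happens inside the HAL and in the kernel depends on each chip vendor's implementation. Beyond those, Google's goldfish (the Android emulator) also contains EmulatedCamera, whose structure is quite instructive, so let's study how goldfish does it.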

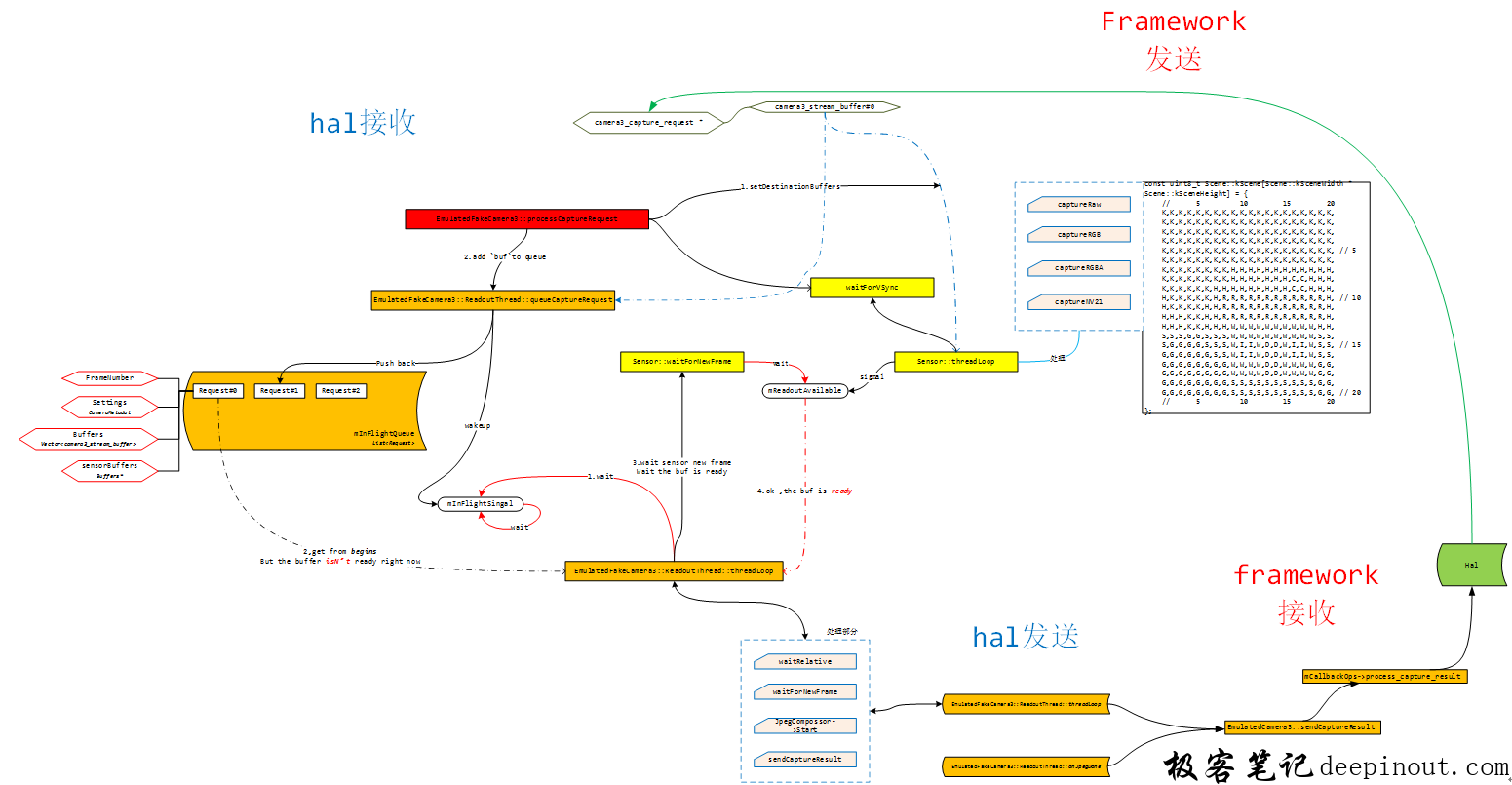

The figure above describes how the framework and HAL layers interact around buffers.

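As described above, the framework obtains gralloc buffers through Camera3BufferManager::getBufferForStream, packs them into a hal_request, and then calls the HAL's process_capture_request for each request. At this point the buffers have passed from the framework into the HAL.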

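The HAL gets data from the kernel (here, the Sensor module) and fills it into the buffers. It then passes the buffers from the HAL back to the framework through the process_capture_result of the Camera3Device object that mCallbackOps refers to. At that point the buffers contain image data from the Sensor module.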

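The HAL organizes several lists internally, and other chip vendors' HALs may have even more list-like structures. There are generally at least two:
- One holds only requests, each carrying a CameraMetadata object that describes the capture configuration.
- The other is a queue of buffers.

Because both carry a frameNumber, they can be matched one-to-one without confusion.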

In EmulatedCamera, mInFlightQueue holds the incoming requests, and EmulatedCamera has a ReadoutThread that runs as a loop.

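First, the ReadoutThread waits on mInFlightQueue. When a new request is added, it wakes up and immediately takes the request at the head of mInFlightQueue. The request contains the buffer addresses, but the buffers have not been filled with data yet, so ReadoutThread then waits for the Sensor module to fill them.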
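The readout step can be modeled without threads to show its ordering rule. Requests leave mInFlightQueue in FIFO order, and the head request completes only once the sensor has filled its buffers.

Below is a hypothetical, single-threaded sketch of that rule. `Readout`, `onSensorFilled` and `tryReadout` are illustrative names, not EmulatedFakeCamera3 APIs. The `mCurrent` member plays the role of mCurrentRequest.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <optional>
#include <set>

struct InFlight { uint32_t frameNumber; };

class Readout {
public:
    void queueCaptureRequest(uint32_t frame) { mInFlightQueue.push_back({frame}); }
    void onSensorFilled(uint32_t frame) { mFilled.insert(frame); }

    // Take the head request (FIFO). Return its frame number as the "result"
    // only once the sensor has filled it; otherwise keep waiting on it.
    std::optional<uint32_t> tryReadout() {
        if (!mCurrent) {
            if (mInFlightQueue.empty()) return std::nullopt;
            mCurrent = mInFlightQueue.front();
            mInFlightQueue.pop_front();
        }
        if (mFilled.erase(mCurrent->frameNumber) == 0) return std::nullopt;
        uint32_t done = mCurrent->frameNumber;
        mCurrent.reset();
        return done;
    }

private:
    std::deque<InFlight> mInFlightQueue;
    std::optional<InFlight> mCurrent;
    std::set<uint32_t> mFilled;
};
```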

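The Sensor module fills images from a basic pattern according to the format (RAW, RGB, RGBA, YUV420 and so on), and it even adds some artificially generated noise, which is rather interesting.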
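Per pixel, the RAW generation in Sensor::captureRaw (listed later) boils down to these steps:
- Choose the RGGB channel for (x, y).
- Clamp to the saturation level.
- Apply gain.
- Clamp to the ADC maximum.
- Add the black level and noise.

Below is a minimal sketch of the deterministic part, with noise left out. The constants used in the tests are made up, not the emulator's kSaturationElectrons or kMaxRawValue. Unlike the original, this sketch clamps in floating point before narrowing to uint16_t. That avoids wrap-around when electrons times gain exceeds 65535.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

enum Channel { R = 0, Gr = 1, Gb = 2, B = 3 };

// Even rows read R Gr R Gr ..., odd rows read Gb B Gb B ...
Channel bayerChannel(unsigned x, unsigned y) {
    static const Channel rggb[4] = {R, Gr, Gb, B};
    return rggb[(y & 1u) * 2 + (x & 1u)];
}

// Electrons -> raw code value, without the noise term.
uint16_t rawValue(uint32_t electrons, float totalGain,
                  uint32_t saturationElectrons, uint16_t maxRaw, uint16_t blackLevel) {
    electrons = std::min(electrons, saturationElectrons);   // full-well clamp
    float scaled = electrons * totalGain;
    float clamped = std::min(scaled, static_cast<float>(maxRaw));  // ADC clamp
    return static_cast<uint16_t>(static_cast<uint16_t>(clamped) + blackLevel);
}
```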

Next, let's look at the EmulatedCamera implementation in detail.

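First, ReadoutThread follows the flow described above, with one extra case to handle: JPEG (the mJpeg path). Today's ISP chips also have a hardware JPEG compression module.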
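The JPEG branch in ReadoutThread::threadLoop splits a request's buffers into two groups:
- A non-depth BLOB buffer goes to the single JPEG compressor and is returned later.
- Every other buffer is returned right away with an OK or ERROR status.

Below is a hypothetical sketch of that decision. `Buf` and `splitForJpeg` are illustrative, and formats and data spaces are reduced to small enums.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class Format { Blob, Yuv, Raw16 };
enum class DataSpace { Jfif, Depth };
enum class Status { Ok, Error };
struct Buf { Format format; DataSpace dataSpace; Status status = Status::Ok; };

// The first non-depth BLOB goes to the JPEG encoder if it is idle; all other
// buffers are marked and kept for immediate return. Returns how many remain.
size_t splitForJpeg(std::vector<Buf> &bufs, bool &jpegBusy, std::vector<Buf> &toEncoder) {
    std::vector<Buf> returnNow;
    for (Buf b : bufs) {
        bool isJpeg = b.format == Format::Blob && b.dataSpace != DataSpace::Depth;
        if (isJpeg && !jpegBusy) {
            jpegBusy = true;            // like mJpegWaiting = true
            toEncoder.push_back(b);     // returned later, after compression
            continue;
        }
        b.status = isJpeg ? Status::Error : Status::Ok;  // encoder already busy
        returnNow.push_back(b);
    }
    bufs = returnNow;
    return bufs.size();
}
```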

```cpp
bool EmulatedFakeCamera3::ReadoutThread::threadLoop() {
    status_t res;

    if (mCurrentRequest.settings.isEmpty()) {
        Mutex::Autolock l(mLock);
        if (mInFlightQueue.empty()) {
            res = mInFlightSignal.waitRelative(mLock, kWaitPerLoop); // Sleep until mInFlightQueue has a request
            if (res == TIMED_OUT) return true;                       // Nothing queued yet; loop again
        }
        mCurrentRequest.frameNumber = mInFlightQueue.begin()->frameNumber;
        mCurrentRequest.settings.acquire(mInFlightQueue.begin()->settings);
        mCurrentRequest.buffers = mInFlightQueue.begin()->buffers;
        mCurrentRequest.sensorBuffers = mInFlightQueue.begin()->sensorBuffers;
        mInFlightQueue.erase(mInFlightQueue.begin());
        mInFlightSignal.signal();
        mThreadActive = true;
    }

    nsecs_t captureTime;
    bool gotFrame =
            mParent->mSensor->waitForNewFrame(kWaitPerLoop, &captureTime); // Wait for the sensor to produce a new frame
    if (!gotFrame) return true; // Sensor not done yet; try again on the next loop

    // Check if we need to JPEG encode a buffer, and send it for async
    // compression if so. Otherwise prepare the buffer for return.
    bool needJpeg = false;
    // For every buffer in the current request that is a BLOB and not a depth
    // buffer, if the JPEG encoder is idle, let mJpegCompressor compress the
    // sensor data into JPEG.
    HalBufferVector::iterator buf = mCurrentRequest.buffers->begin();
    while (buf != mCurrentRequest.buffers->end()) {
        bool goodBuffer = true;
        if (buf->stream->format == HAL_PIXEL_FORMAT_BLOB &&
                buf->stream->data_space != HAL_DATASPACE_DEPTH) {
            Mutex::Autolock jl(mJpegLock);
            if (mJpegWaiting) {
                // This shouldn't happen, because processCaptureRequest should
                // be stalling until JPEG compressor is free.
                ALOGE("%s: Already processing a JPEG!", __FUNCTION__);
                goodBuffer = false;
            }
            if (goodBuffer) {
                // Compressor takes ownership of sensorBuffers here
                res = mParent->mJpegCompressor->start(mCurrentRequest.sensorBuffers,
                        this);
                goodBuffer = (res == OK);
            }
            if (goodBuffer) {
                needJpeg = true;
                mJpegHalBuffer = *buf;
                mJpegFrameNumber = mCurrentRequest.frameNumber;
                mJpegWaiting = true;
                mCurrentRequest.sensorBuffers = NULL;
                buf = mCurrentRequest.buffers->erase(buf);
                continue;
            }
        }
        GraphicBufferMapper::get().unlock(*(buf->buffer));
        buf->status = goodBuffer ? CAMERA3_BUFFER_STATUS_OK :
                CAMERA3_BUFFER_STATUS_ERROR;
        buf->acquire_fence = -1;
        buf->release_fence = -1;
        ++buf;
    } // end while

    // Construct result for all completed buffers and results
    camera3_capture_result result;
    result.frame_number = mCurrentRequest.frameNumber;
    result.result = mCurrentRequest.settings.getAndLock();
    result.num_output_buffers = mCurrentRequest.buffers->size();
    result.output_buffers = mCurrentRequest.buffers->array();
    result.input_buffer = nullptr;
    result.partial_result = 1;

    mParent->sendCaptureResult(&result); // Send to the framework (through mCallbackOps)

    // Clean up
    mCurrentRequest.settings.unlock(result.result);
    delete mCurrentRequest.buffers;
    mCurrentRequest.buffers = NULL;
    mCurrentRequest.settings.clear();

    return true;
}
```

Next is the Sensor's threadLoop:

```cpp
bool Sensor::threadLoop() {
    /**
     * Stage 1: Read in latest control parameters
     */
    // These are set by processCaptureRequest through setExposureTime(),
    // setDestinationBuffers(), setFrameNumber() and friends.
    uint64_t exposureDuration;
    uint64_t frameDuration;
    uint32_t gain;
    Buffers *nextBuffers;
    uint32_t frameNumber;
    SensorListener *listener = NULL;
    {
        Mutex::Autolock lock(mControlMutex);
        exposureDuration = mExposureTime;
        frameDuration = mFrameDuration;
        gain = mGainFactor;
        nextBuffers = mNextBuffers; // Points to the buffers passed down from the framework
        frameNumber = mFrameNumber;
        listener = mListener;
        // Don't reuse a buffer set
        mNextBuffers = NULL;

        // Signal VSync for start of readout
        ALOGVV("Sensor VSync");
        mGotVSync = true;
        mVSync.signal();
    }

    /**
     * Stage 3: Read out latest captured image
     */
    Buffers *capturedBuffers = NULL;
    nsecs_t captureTime = 0;

    nsecs_t startRealTime = systemTime();
    // Stagefright cares about system time for timestamps, so base simulated
    // time on that.
    nsecs_t simulatedTime = startRealTime;
    nsecs_t frameEndRealTime = startRealTime + frameDuration;
    nsecs_t frameReadoutEndRealTime = startRealTime +
            kRowReadoutTime * kResolution[1];

    if (mNextCapturedBuffers != NULL) {
        ALOGVV("Sensor starting readout");
        // Pretend we're doing readout now; will signal once enough time has elapsed
        capturedBuffers = mNextCapturedBuffers;
        captureTime = mNextCaptureTime;
    }
    simulatedTime += kRowReadoutTime + kMinVerticalBlank;

    // TODO: Move this signal to another thread to simulate readout
    // time properly
    if (capturedBuffers != NULL) {
        ALOGVV("Sensor readout complete");
        Mutex::Autolock lock(mReadoutMutex);
        if (mCapturedBuffers != NULL) {
            ALOGV("Waiting for readout thread to catch up!");
            mReadoutComplete.wait(mReadoutMutex);
        }

        mCapturedBuffers = capturedBuffers;
        mCaptureTime = captureTime;
        mReadoutAvailable.signal();
        capturedBuffers = NULL;
    }

    /**
     * Stage 2: Capture new image
     */
    mNextCaptureTime = simulatedTime;
    mNextCapturedBuffers = nextBuffers; // Points to the framework's buffers

    if (mNextCapturedBuffers != NULL) {
        if (listener != NULL) {
            listener->onSensorEvent(frameNumber, SensorListener::EXPOSURE_START,
                    mNextCaptureTime);
        }
        ALOGVV("Starting next capture: Exposure: %f ms, gain: %d",
                (float)exposureDuration/1e6, gain);
        mScene.setExposureDuration((float)exposureDuration/1e9); // Set the exposure
        mScene.calculateScene(mNextCaptureTime);                  // Compute the scene for this frame

        // Might be adding more buffers, so size isn't constant
        for (size_t i = 0; i < mNextCapturedBuffers->size(); i++) {
            const StreamBuffer &b = (*mNextCapturedBuffers)[i]; // Fill every output buffer
            switch(b.format) {
                case HAL_PIXEL_FORMAT_RAW16:
                    captureRaw(b.img, gain, b.stride);
                    break;
                case HAL_PIXEL_FORMAT_RGB_888:
                    captureRGB(b.img, gain, b.stride);
                    break;
                case HAL_PIXEL_FORMAT_RGBA_8888:
                    captureRGBA(b.img, gain, b.stride);
                    break;
                case HAL_PIXEL_FORMAT_BLOB:
                    if (b.dataSpace != HAL_DATASPACE_DEPTH) {
                        // Add auxiliary buffer of the right size
                        // Assumes only one BLOB (JPEG) buffer in
                        // mNextCapturedBuffers
                        StreamBuffer bAux;
                        bAux.streamId = 0;
                        bAux.width = b.width;
                        bAux.height = b.height;
                        bAux.format = HAL_PIXEL_FORMAT_RGB_888;
                        bAux.stride = b.width;
                        bAux.buffer = NULL;
                        // TODO: Reuse these
                        bAux.img = new uint8_t[b.width * b.height * 3];
                        mNextCapturedBuffers->push_back(bAux);
                    } else {
                        captureDepthCloud(b.img);
                    }
                    break;
                case HAL_PIXEL_FORMAT_YCrCb_420_SP:
                    captureNV21(b.img, gain, b.stride);
                    break;
                case HAL_PIXEL_FORMAT_YV12:
                    // TODO:
                    ALOGE("%s: Format %x is TODO", __FUNCTION__, b.format);
                    break;
                case HAL_PIXEL_FORMAT_Y16:
                    captureDepth(b.img, gain, b.stride);
                    break;
                default:
                    ALOGE("%s: Unknown format %x, no output", __FUNCTION__,
                            b.format);
                    break;
            }
        }
    }

    ALOGVV("Sensor vertical blanking interval");
    nsecs_t workDoneRealTime = systemTime();
    const nsecs_t timeAccuracy = 2e6; // 2 ms of imprecision is ok
    if (workDoneRealTime < frameEndRealTime - timeAccuracy) {
        timespec t;
        t.tv_sec = (frameEndRealTime - workDoneRealTime) / 1000000000L;
        t.tv_nsec = (frameEndRealTime - workDoneRealTime) % 1000000000L;

        int ret;
        do {
            ret = nanosleep(&t, &t);
        } while (ret != 0);
    }
    nsecs_t endRealTime = systemTime();
    ALOGVV("Frame cycle took %d ms, target %d ms",
            (int)((endRealTime - startRealTime)/1000000),
            (int)(frameDuration / 1000000));
    return true;
}
```

Then comes the Sensor module's per-format processing, for example RAW:

```cpp
void Sensor::captureRaw(uint8_t *img, uint32_t gain, uint32_t stride) {
    float totalGain = gain/100.0 * kBaseGainFactor;
    float noiseVarGain = totalGain * totalGain;
    float readNoiseVar = kReadNoiseVarBeforeGain * noiseVarGain
            + kReadNoiseVarAfterGain;

    // Generate a synthetic image for the framework.
    int bayerSelect[4] = {Scene::R, Scene::Gr, Scene::Gb, Scene::B}; // RGGB
    mScene.setReadoutPixel(0,0);
    for (unsigned int y = 0; y < kResolution[1]; y++ ) { // 480 rows
        int *bayerRow = bayerSelect + (y & 0x1) * 2; // Even rows: {R, Gr}; odd rows: {Gb, B}
        uint16_t *px = (uint16_t*)img + y * stride;   // stride is the stream width from the request
        for (unsigned int x = 0; x < kResolution[0]; x++) { // 640 columns: a VGA image
            uint32_t electronCount;
            electronCount = mScene.getPixelElectrons()[bayerRow[x & 0x1]]; // Channel for this column

            // TODO: Better pixel saturation curve?
            electronCount = (electronCount < kSaturationElectrons) ?
                    electronCount : kSaturationElectrons;

            // TODO: Better A/D saturation curve?
            uint16_t rawCount = electronCount * totalGain;
            rawCount = (rawCount < kMaxRawValue) ? rawCount : kMaxRawValue;

            // Calculate noise value
            // TODO: Use more-correct Gaussian instead of uniform noise
            float photonNoiseVar = electronCount * noiseVarGain;
            float noiseStddev = sqrtf_approx(readNoiseVar + photonNoiseVar);
            // Scaled to roughly match gaussian/uniform noise stddev
            float noiseSample = std::rand() * (2.5 / (1.0 + RAND_MAX)) - 1.25;

            rawCount += kBlackLevel;
            rawCount += noiseStddev * noiseSample;

            *px++ = rawCount;
        }
        // TODO: Handle this better
        //simulatedTime += kRowReadoutTime;
    }
    ALOGVV("Raw sensor image captured");
}
```

The HAL handles the buffers passed down from the framework: processCaptureRequest receives the requests the framework sends.

status_t EmulatedFakeCamera3::processCaptureRequest(

camera3_capture_request *request) {

Mutex::Autolock l(mLock);

// request和buf不变的是frame number.

uint32_t frameNumber = request->frame_number;

ssize_t idx;

const camera3_stream_buffer_t *b;

if (request->input_buffer != NULL) {

idx = -1;

b = request->input_buffer;

} else {

idx = 0;

b = request->output_buffers;

}

CameraMetadata settings;

// 处理3A的数据

res = process3A(settings);

if (res != OK) {

return res;

}

/**

* Get ready for sensor config

*/

nsecs_t exposureTime;

nsecs_t frameDuration;

uint32_t sensitivity;

bool needJpeg = false;

camera_metadata_entry_t entry;

entry = settings.find(ANDROID_SENSOR_EXPOSURE_TIME);

exposureTime = (entry.count > 0) ? entry.data.i64[0] : Sensor::kExposureTimeRange[0];

entry = settings.find(ANDROID_SENSOR_FRAME_DURATION);

frameDuration = (entry.count > 0)? entry.data.i64[0] : Sensor::kFrameDurationRange[0];

entry = settings.find(ANDROID_SENSOR_SENSITIVITY);

sensitivity = (entry.count > 0) ? entry.data.i32[0] : Sensor::kSensitivityRange[0];

if (exposureTime > frameDuration) {

frameDuration = exposureTime + Sensor::kMinVerticalBlank;

settings.update(ANDROID_SENSOR_FRAME_DURATION, &frameDuration, 1);

}

Buffers *sensorBuffers = new Buffers();

HalBufferVector *buffers = new HalBufferVector();

sensorBuffers->setCapacity(request->num_output_buffers);

buffers->setCapacity(request->num_output_buffers);

// Process all the buffers we got for output, constructing internal buffer

// structures for them, and lock them for writing.

for (size_t i = 0; i < request->num_output_buffers; i++) {

const camera3_stream_buffer &srcBuf = request->output_buffers[i];//这里是framework给的buf

const cb_handle_t *privBuffer =

static_cast<const cb_handle_t*>(*srcBuf.buffer);

StreamBuffer destBuf;

destBuf.streamId = kGenericStreamId;

destBuf.width = srcBuf.stream->width;

destBuf.height = srcBuf.stream->height;

destBuf.format = privBuffer->format; // Use real private format

destBuf.stride = srcBuf.stream->width; // TODO: query from gralloc

destBuf.dataSpace = srcBuf.stream->data_space;

destBuf.buffer = srcBuf.buffer;

if (destBuf.format == HAL_PIXEL_FORMAT_BLOB) {

needJpeg = true;// BLOB类型需要Jpeg

}

// Wait on fence

// 等待fence可写

sp<Fence> bufferAcquireFence = new Fence(srcBuf.acquire_fence);

res = bufferAcquireFence->wait(kFenceTimeoutMs);

if (res == OK) {

// Lock buffer for writing

const Rect rect(destBuf.width, destBuf.height);

if (srcBuf.stream->format == HAL_PIXEL_FORMAT_YCbCr_420_888) {

if (privBuffer->format == HAL_PIXEL_FORMAT_YCrCb_420_SP) {

android_ycbcr ycbcr = android_ycbcr();

// yuv420的需要lock住write操作

res = GraphicBufferMapper::get().lockYCbCr(

*(destBuf.buffer),

GRALLOC_USAGE_HW_CAMERA_WRITE, rect,

&ycbcr);

// This is only valid because we know that emulator's

// YCbCr_420_888 is really contiguous NV21 under the hood

destBuf.img = static_cast<uint8_t*>(ycbcr.y);

} else {

ALOGE("Unexpected private format for flexible YUV: 0x%x",

privBuffer->format);

res = INVALID_OPERATION;

}

} else {

res = GraphicBufferMapper::get().lock(*(destBuf.buffer),

GRALLOC_USAGE_HW_CAMERA_WRITE, rect,

(void**)&(destBuf.img));

}

}

sensorBuffers->push_back(destBuf);// 把framework的buf存入sensorBuffer中

buffers->push_back(srcBuf);

}

/**

* Wait for JPEG compressor to not be busy, if needed

*/

if (needJpeg) {

bool ready = mJpegCompressor->waitForDone(kFenceTimeoutMs);

if (!ready) {

ALOGE("%s: Timeout waiting for JPEG compression to complete!",

__FUNCTION__);

return NO_INIT;

}

}

/**

* Wait until the in-flight queue has room

*/

res = mReadoutThread->waitForReadout();

if (res != OK) {

ALOGE("%s: Timeout waiting for previous requests to complete!",

__FUNCTION__);

return NO_INIT;

}

/**

* Wait until sensor's ready. This waits for lengthy amounts of time with

* mLock held, but the interface spec is that no other calls may by done to

* the HAL by the framework while process_capture_request is happening.

*/

int syncTimeoutCount = 0;

while(!mSensor->waitForVSync(kSyncWaitTimeout)) {

if (mStatus == STATUS_ERROR) {

return NO_INIT;

}

if (syncTimeoutCount == kMaxSyncTimeoutCount) {

ALOGE("%s: Request %d: Sensor sync timed out after %" PRId64 " ms",

__FUNCTION__, frameNumber,

kSyncWaitTimeout * kMaxSyncTimeoutCount / 1000000);

return NO_INIT;

}

syncTimeoutCount++;

}

/**

* Configure sensor and queue up the request to the readout thread

*/

mSensor->setExposureTime(exposureTime);

mSensor->setFrameDuration(frameDuration);

mSensor->setSensitivity(sensitivity);

mSensor->setDestinationBuffers(sensorBuffers); // 把framework传来的buf提交给sensor模块处理

mSensor->setFrameNumber(request->frame_number);

ReadoutThread::Request r;

r.frameNumber = request->frame_number;

r.settings = settings;

r.sensorBuffers = sensorBuffers;

r.buffers = buffers;

mReadoutThread->queueCaptureRequest(r);

ALOGVV("%s: Queued frame %d", __FUNCTION__, request->frame_number);

// Cache the settings for next time

mPrevSettings.acquire(settings);

return OK;

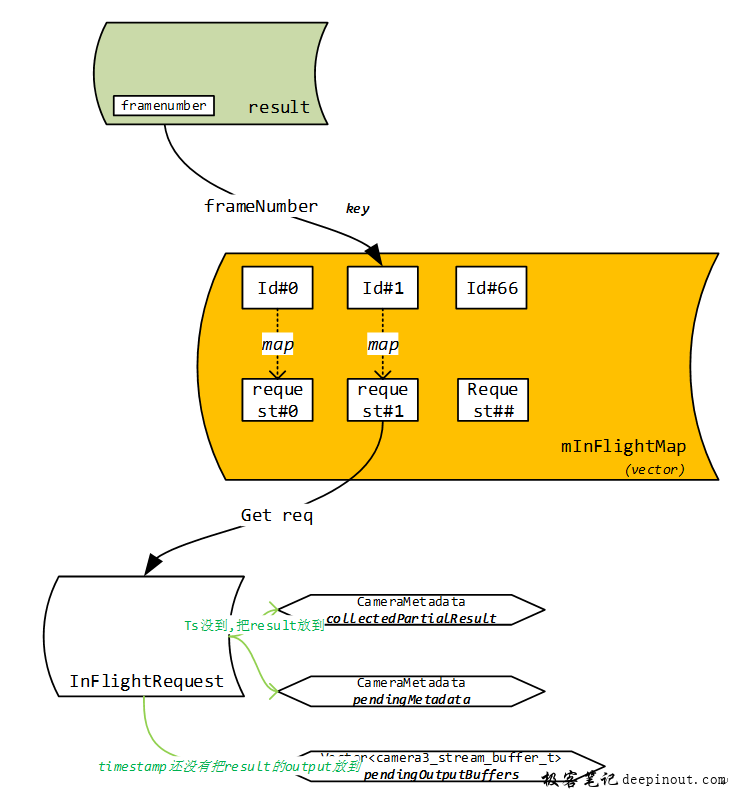

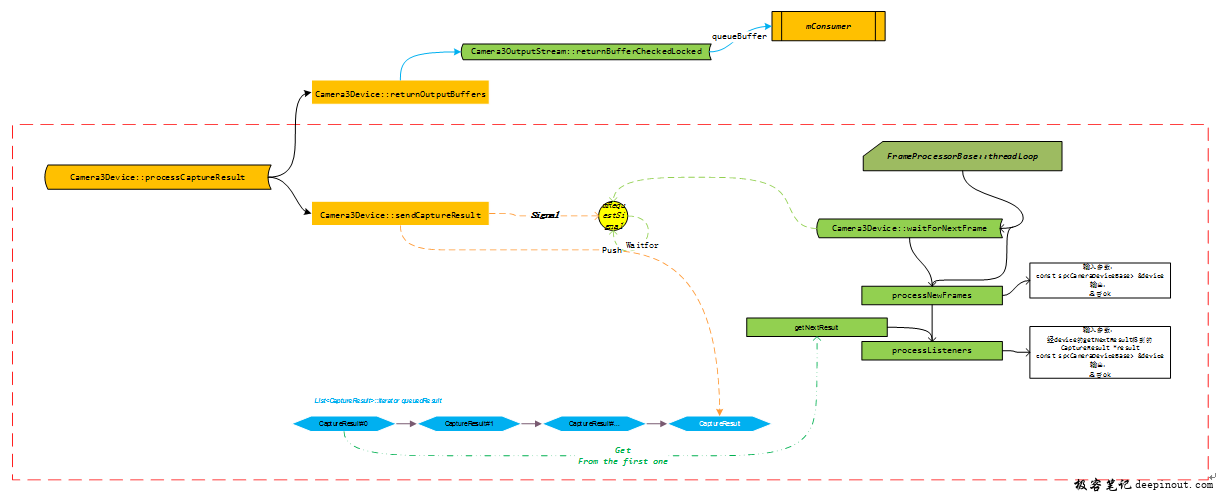

}最后是,hal层向framework层传输buf以及framework层,特别是Camera3Device类对buf的处理,如下图Camera3Device中也会有描述request的mInFlightMap结构以及inFlightRequest结构,前者是个map,描述了frame number和request id的对应关系,后者保存有request。

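As we said before, requests and buffers are only loosely coupled: a buffer is just a block of memory, and a request just holds the configuration for one capture. What links them is the frame number. Here, too, Camera3Device takes the frame number from the result returned by the HAL and uses it to look up the corresponding request in mInFlightMap. In other words, the HAL does not return the request itself.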
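Since the result carries only a frame number, the framework-side lookup is a map keyed by frame number. Below is a simplified, hypothetical sketch of that bookkeeping; `InFlightMap` and `onResult` are illustrative names. It counts returned buffers and drops the entry once all buffers and the final metadata have arrived. The real removeInFlightRequestIfReadyLocked also considers the shutter notification, which this sketch ignores.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>

struct InFlightRequest {
    int32_t requestId;
    int numBuffersLeft;
    bool haveResultMetadata = false;
};

class InFlightMap {
public:
    void add(uint32_t frameNumber, int32_t requestId, int numBuffers) {
        mMap[frameNumber] = InFlightRequest{requestId, numBuffers};
    }
    // Apply one HAL result; returns the request ID, or -1 for an unknown frame.
    int32_t onResult(uint32_t frameNumber, int buffersReturned, bool hasMetadata) {
        auto it = mMap.find(frameNumber);
        if (it == mMap.end()) return -1;
        InFlightRequest &req = it->second;
        req.numBuffersLeft -= buffersReturned;
        req.haveResultMetadata = req.haveResultMetadata || hasMetadata;
        int32_t id = req.requestId;
        if (req.numBuffersLeft <= 0 && req.haveResultMetadata) mMap.erase(it);
        return id;
    }
    size_t size() const { return mMap.size(); }
private:
    std::map<uint32_t, InFlightRequest> mMap;
};
```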

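As we also said before, receiving a result from the HAL does not mean its buffers are usable yet. That depends on whether the sensor timestamp, delivered with the shutter notification, has arrived (in EmulatedCamera it always has). If the sensor timestamp has not arrived yet, the framework must:

- Put the result's buffers into the request's pendingOutputBuffers.

- Put the corresponding metadata into pendingMetadata.

Once the timestamp has arrived, and the result is either not partial or the last partial result, two things can happen: the result's buffers (holding the image data) are returned to their stream, and the request (holding the configuration) is sent to whoever is waiting for it.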

- The data part flows to mConsumer, through its queueBuffer.

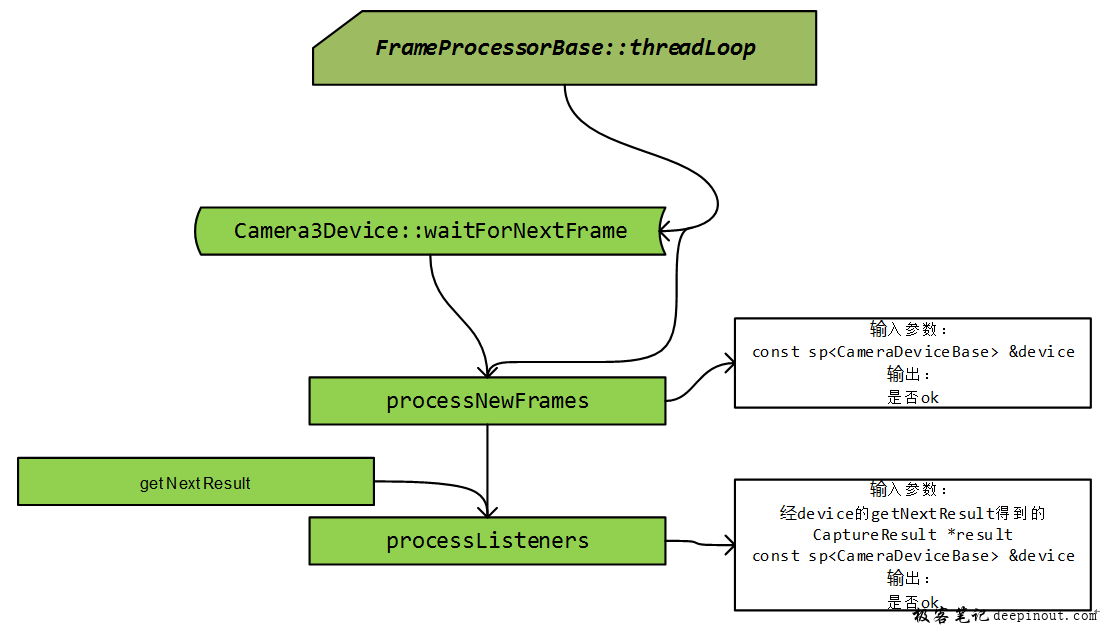

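The configuration part flows to the threadLoop of FrameProcessorBase and, through it, to the listeners registered by whoever is interested in this request. The figure shows how a request carrying metadata is handled on its way back to the framework.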
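The shutter-timestamp rule can be isolated in a few lines. Buffers that arrive before the timestamp are parked in pendingOutputBuffers. The shutter event then flushes them to the stream with the timestamp attached. Buffers that arrive later go straight to the stream.

Below is a hypothetical sketch of that rule. `Request`, `Stream`, `onResultBuffers` and `onShutter` are illustrative names, and plain ints stand in for buffer handles.

```cpp
#include <cassert>
#include <cstdint>
#include <utility>
#include <vector>

struct Request {
    int64_t shutterTimestamp = 0;           // 0 = shutter not received yet
    std::vector<int> pendingOutputBuffers;  // parked buffer handles
};

struct Stream {
    std::vector<std::pair<int, int64_t>> queued;  // (handle, timestamp)
    void returnBuffer(int handle, int64_t ts) { queued.emplace_back(handle, ts); }
};

// Result path: park the buffers, or return them if the timestamp is known.
void onResultBuffers(Request &req, Stream &stream, const std::vector<int> &bufs) {
    if (req.shutterTimestamp == 0) {
        req.pendingOutputBuffers.insert(req.pendingOutputBuffers.end(),
                                        bufs.begin(), bufs.end());
    } else {
        for (int b : bufs) stream.returnBuffer(b, req.shutterTimestamp);
    }
}

// Shutter path: record the timestamp and flush anything that was parked.
void onShutter(Request &req, Stream &stream, int64_t timestamp) {
    req.shutterTimestamp = timestamp;
    for (int b : req.pendingOutputBuffers) stream.returnBuffer(b, timestamp);
    req.pendingOutputBuffers.clear();
}
```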

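The following code implements the logic above.

After the framework receives a result containing buffers, it handles the buffers and the request separately, depending on whether the sensor timestamp for those buffers has arrived.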

void Camera3Device::processCaptureResult(const camera3_capture_result *result) {

uint32_t frameNumber = result->frame_number;

bool isPartialResult = false;

CameraMetadata collectedPartialResult;

CaptureResultExtras resultExtras;

bool hasInputBufferInRequest = false;

// Get shutter timestamp and resultExtras from list of in-flight requests,

// where it was added by the shutter notification for this frame. If the

// shutter timestamp isn't received yet, append the output buffers to the

// in-flight request and they will be returned when the shutter timestamp

// arrives. Update the in-flight status and remove the in-flight entry if

// all result data and shutter timestamp have been received.

nsecs_t shutterTimestamp = 0;

{

Mutex::Autolock l(mInFlightLock);

// 第一,从结果中拿到framenumber,在根据frameNumber在mInflightMap中扎到 request。

ssize_t idx = mInFlightMap.indexOfKey(frameNumber);

InFlightRequest &request = mInFlightMap.editValueAt(idx);

if (result->partial_result != 0)

request.resultExtras.partialResultCount = result->partial_result;

// Check if this result carries only partial metadata

if (mUsePartialResult && result->result != NULL) {

// 第二,本次result是“部分的”,把本次result添加到collectedPartialResult中。

if (mDeviceVersion >= CAMERA_DEVICE_API_VERSION_3_2) {

if (result->partial_result > mNumPartialResults || result->partial_result < 1) {

SET_ERR("Result is malformed for frame %d: partial_result %u must be in"

" the range of [1, %d] when metadata is included in the result",

frameNumber, result->partial_result, mNumPartialResults);

return;

}

isPartialResult = (result->partial_result < mNumPartialResults);

if (isPartialResult) {

request.collectedPartialResult.append(result->result);

}

} else {

}

if (isPartialResult) {

// Send partial capture result

sendPartialCaptureResult(result->result, request.resultExtras, frameNumber,

request.aeTriggerCancelOverride);

}

}

shutterTimestamp = request.shutterTimestamp;

hasInputBufferInRequest = request.hasInputBuffer;

// Did we get the (final) result metadata for this capture?

if (result->result != NULL && !isPartialResult) {

if (request.haveResultMetadata) {

SET_ERR("Called multiple times with metadata for frame %d",

frameNumber);

return;

}

if (mUsePartialResult &&

!request.collectedPartialResult.isEmpty()) {

collectedPartialResult.acquire(

request.collectedPartialResult);

}

request.haveResultMetadata = true;

}

uint32_t numBuffersReturned = result->num_output_buffers;

if (result->input_buffer != NULL) {

if (hasInputBufferInRequest) {

numBuffersReturned += 1;

} else {

ALOGW("%s: Input buffer should be NULL if there is no input"

" buffer sent in the request",

__FUNCTION__);

}

}

request.numBuffersLeft -= numBuffersReturned;

if (request.numBuffersLeft < 0) {

SET_ERR("Too many buffers returned for frame %d",

frameNumber);

return;

}

// 第三,拿到time stamp

camera_metadata_ro_entry_t entry;

res = find_camera_metadata_ro_entry(result->result,

ANDROID_SENSOR_TIMESTAMP, &entry);

if (res == OK && entry.count == 1) {

request.sensorTimestamp = entry.data.i64[0];

}

// If shutter event isn't received yet, append the output buffers to

// the in-flight request. Otherwise, return the output buffers to

// streams.

// 如果返回的result的timeStamp还没到,暂时把result放到request的pendingOutputBuffers这个vector上。appendArray需要一个带保存的对象指针,还有带保存的对象个数。

if (shutterTimestamp == 0) {

request.pendingOutputBuffers.appendArray(result->output_buffers,

result->num_output_buffers);

} else {

// 如果timeStamp如期而至,需要把结果中的output_buffers返回给stream。

returnOutputBuffers(result->output_buffers,

result->num_output_buffers, shutterTimestamp);

}

// 特别地,对于非“部分”或者“部分的最后一个”,并且拿到了timeStamp,此时要把结果发送出去。

if (result->result != NULL && !isPartialResult) {

if (shutterTimestamp == 0) {

request.pendingMetadata = result->result;

request.collectedPartialResult = collectedPartialResult;

} else {

CameraMetadata metadata;

metadata = result->result;

// 对于拿到timeStamp并且不是partial的,需要把这个result发送给等待的user

sendCaptureResult(metadata, request.resultExtras,

collectedPartialResult, frameNumber, hasInputBufferInRequest,

request.aeTriggerCancelOverride);

}

}

removeInFlightRequestIfReadyLocked(idx);

} // scope for mInFlightLock

if (result->input_buffer != NULL) {

if (hasInputBufferInRequest) {

Camera3Stream *stream =

Camera3Stream::cast(result->input_buffer->stream);

res = stream->returnInputBuffer(*(result->input_buffer));

} else {

}

}

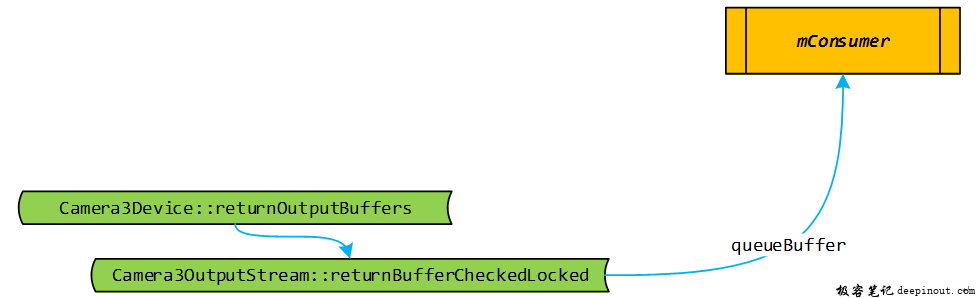

}ReturnOutputBuffer会把buffer返回给consumer。

- A question may arise here: what is the consumer?

- And what does the consumer's queue operation do?

Returning the buffers to the consumer:

```cpp
void Camera3Device::returnOutputBuffers(
        const camera3_stream_buffer_t *outputBuffers, size_t numBuffers,
        nsecs_t timestamp) {
    for (size_t i = 0; i < numBuffers; i++)
    {
        Camera3Stream *stream = Camera3Stream::cast(outputBuffers[i].stream);
        status_t res = stream->returnBuffer(outputBuffers[i], timestamp);
    }
}
```

returnBuffer eventually calls returnBufferCheckedLocked:

```cpp
status_t Camera3OutputStream::returnBufferCheckedLocked(
        const camera3_stream_buffer &buffer,
        nsecs_t timestamp,
        bool output,
        /*out*/
        sp<Fence> *releaseFenceOut) {
    status_t res;
    sp<Fence> releaseFence = new Fence(buffer.release_fence);
    int anwReleaseFence = releaseFence->dup();
    sp<ANativeWindow> currentConsumer = mConsumer;

    /**
     * Return buffer back to ANativeWindow
     */
    if (buffer.status == CAMERA3_BUFFER_STATUS_ERROR) {
        // Error path omitted in this excerpt.
    } else {
        /* Certain consumers (such as AudioSource or HardwareComposer) use
         * MONOTONIC time, causing time misalignment if camera timestamp is
         * in BOOTTIME. Do the conversion if necessary. */
        res = native_window_set_buffers_timestamp(mConsumer.get(),
                mUseMonoTimestamp ? timestamp - mTimestampOffset : timestamp);

        res = currentConsumer->queueBuffer(currentConsumer.get(),
                container_of(buffer.buffer, ANativeWindowBuffer, handle),
                anwReleaseFence);
    }
    *releaseFenceOut = releaseFence;
    return res;
}
```

Question 1: what is the consumer?

The consumer here is a Surface object, constructed in CameraDeviceClient::createStream when the stream is created.

```cpp
binder::Status CameraDeviceClient::createStream(
        const hardware::camera2::params::OutputConfiguration &outputConfiguration,
        /*out*/
        int32_t* newStreamId) {
    // A binder interface.
    sp<IGraphicBufferProducer> bufferProducer = outputConfiguration.getGraphicBufferProducer();
    sp<IBinder> binder = IInterface::asBinder(bufferProducer);

    // The Surface built here becomes the stream's consumer.
    sp<Surface> surface = new Surface(bufferProducer, useAsync);
    ANativeWindow *anw = surface.get();

    err = mDevice->createStream(surface, width, height, format, dataSpace,
            static_cast<camera3_stream_rotation_t>(outputConfiguration.getRotation()),
            &streamId, outputConfiguration.getSurfaceSetID());
}
```

Now look at Camera3Device::createStream: the stream it creates is a Camera3OutputStream, built from the format, usage and related information.

```cpp
status_t Camera3Device::createStream(sp<Surface> consumer,
        uint32_t width, uint32_t height, int format,
        android_dataspace dataSpace, camera3_stream_rotation_t rotation,
        int *id, int streamSetId, uint32_t consumerUsage) {
    newStream = new Camera3OutputStream(mNextStreamId, consumer,
            width, height, blobBufferSize, format, dataSpace, rotation,
            mTimestampOffset, streamSetId);
}
```

Question 2: what does the consumer's queue operation do?

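When the HAL passes a buffer up, we return it to the interested party, which ends up in Surface::queueBuffer. Several things need to be made clear here, starting with:

- What is the buffer queued onto?

Surface::queueBuffer hands the buffer, identified by its slot index, to mGraphicBufferProducer->queueBuffer(), that is, to the producer side of the BufferQueue behind this Surface. It then broadcasts mQueueBufferCondition to let interested parties know that a new buffer has arrived.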
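One non-obvious step in Surface::queueBuffer is the surface-damage conversion. Dirty rectangles arrive in OpenGL ES convention, with the origin at the bottom-left. So y is flipped against the buffer height, and for rotated transforms the flipped rectangle is also rotated back.

Below is a minimal sketch of the no-rotation and 180-degree cases. It uses a hypothetical plain `Rect` struct with the same field order (left, top, right, bottom) rather than android::Rect.

```cpp
#include <cassert>

struct Rect { int left, top, right, bottom; };

// Keep x, flip y: GL's bottom-left origin -> the buffer's top-left origin.
Rect flipFromGl(const Rect &gl, int height) {
    return Rect{gl.left, height - gl.bottom, gl.right, height - gl.top};
}

// NATIVE_WINDOW_TRANSFORM_ROT_180 case: mirror both axes of the flipped rect.
Rect flipRot180(const Rect &gl, int width, int height) {
    Rect f = flipFromGl(gl, height);
    return Rect{width - f.right, height - f.bottom, width - f.left, height - f.top};
}
```

For a 100-pixel-high buffer, a GL rectangle spanning y = 10..20 becomes y = 80..90 after the flip. The 180-degree case then maps it back to y = 10..20 and mirrors x.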

int Surface::queueBuffer(android_native_buffer_t* buffer, int fenceFd) {

int i = getSlotFromBufferLocked(buffer);//从mSlots中找到buffer handle一样的那个slot的id.找不到就报错返回.

// 对于共享buf.无需多次queue,已经queue之后,其他的buf都是一个buf.

if (mSharedBufferSlot == i && mSharedBufferHasBeenQueued) {

return OK;

}

// Make sure the crop rectangle is entirely inside the buffer.

Rect crop(Rect::EMPTY_RECT);

// buffer的宽高扣出一个矩形,并组成input.

mCrop.intersect(Rect(buffer->width, buffer->height), &crop);

sp<Fence> fence(fenceFd >= 0 ? new Fence(fenceFd) : Fence::NO_FENCE);

IGraphicBufferProducer::QueueBufferOutput output;

IGraphicBufferProducer::QueueBufferInput input(timestamp, isAutoTimestamp,

mDataSpace, crop, mScalingMode, mTransform ^ mStickyTransform,

fence, mStickyTransform);

if (mConnectedToCpu || mDirtyRegion.bounds() == Rect::INVALID_RECT) {

input.setSurfaceDamage(Region::INVALID_REGION);

} else {

// openGl ES的坐标和系统buf的坐标有差异,openGlES是左下角为开始.系统用的左上角作为开始.我们这里做一个保持x坐标,颠倒y坐标,bottom-up.

// 另外,如果openGlES中根据SurfaceFlinger的需求做过rotation.这里在返回给系统的时候需要再次做一个opposite的rotation.

int width = buffer->width;

int height = buffer->height;

bool rotated90 = (mTransform ^ mStickyTransform) &

NATIVE_WINDOW_TRANSFORM_ROT_90;

if (rotated90) {

std::swap(width, height);

}

Region flippedRegion;

for (auto rect : mDirtyRegion) {

int left = rect.left;

int right = rect.right;

int top = height - rect.bottom; // Flip from OpenGL convention

int bottom = height - rect.top; // Flip from OpenGL convention

switch (mTransform ^ mStickyTransform) {

case NATIVE_WINDOW_TRANSFORM_ROT_90: {

// Rotate 270 degrees

Rect flippedRect{top, width - right, bottom, width - left};

flippedRegion.orSelf(flippedRect);

break;

}

case NATIVE_WINDOW_TRANSFORM_ROT_180: {

// Rotate 180 degrees

Rect flippedRect{width - right, height - bottom,

width - left, height - top};

flippedRegion.orSelf(flippedRect);

break;

}

case NATIVE_WINDOW_TRANSFORM_ROT_270: {

// Rotate 90 degrees

Rect flippedRect{height - bottom, left,

height - top, right};

flippedRegion.orSelf(flippedRect);

break;

}

default: {

Rect flippedRect{left, top, right, bottom};

flippedRegion.orSelf(flippedRect);

break;

}

}

}

input.setSurfaceDamage(flippedRegion);

}

nsecs_t now = systemTime();

// 把buffer queue到GraphicBufferProducer中.应该是它的mActiveBuffer中.

status_t err = mGraphicBufferProducer->queueBuffer(i, input, &output);

// 有新的buffer了,需要对关心者广播一下.以便它们及时使用

mQueueBufferCondition.broadcast();

return err;

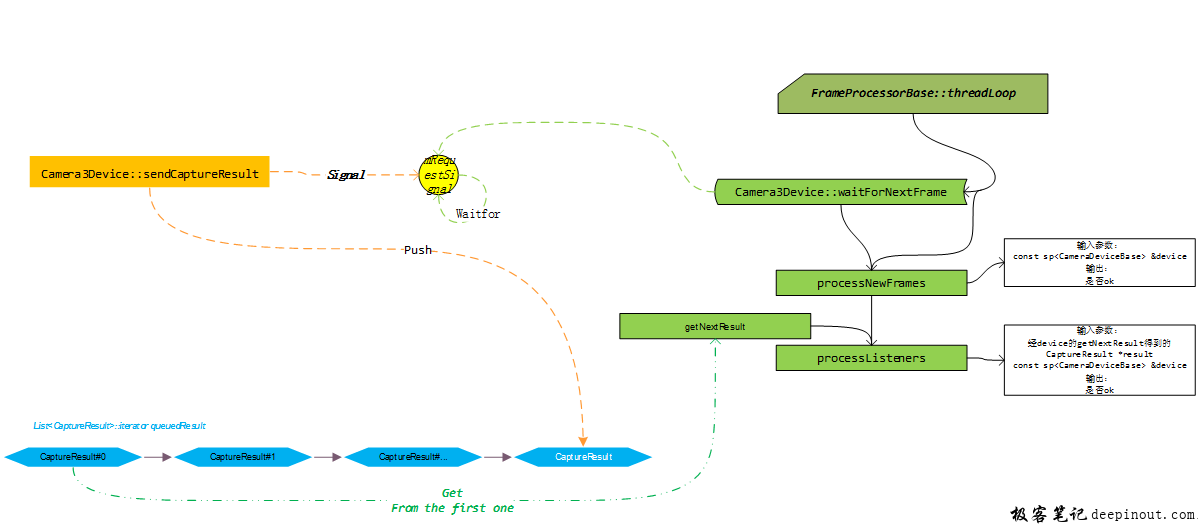

}- Buf的去向解释清楚了,还有一个是对应的buffer的request。这个request里含有本次请求的信息,比如camera Metadata。

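As shown in the figure, the request's path mainly involves:

- A result queue list (mResultQueue).

- The threadLoop of FrameProcessorBase, which waits for new frame results and, when one arrives, mainly calls the listeners registered by interested parties.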
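The metadata path is another queue plus a consumer thread. Below is a simplified, hypothetical sketch of it; `ResultQueue`, `FrameProcessor` and `registerListener` are illustrative names, not the FrameProcessorBase APIs. The sketch inserts results, signals waiters, and dispatches each result to the listeners registered for its request ID.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstdint>
#include <functional>
#include <list>
#include <map>
#include <mutex>
#include <utility>
#include <vector>

struct CaptureResult { uint32_t frameNumber; int32_t requestId; };

class ResultQueue {
public:
    void insertResult(const CaptureResult &r) {
        { std::lock_guard<std::mutex> l(mMutex); mQueue.push_back(r); }
        mSignal.notify_all();                 // like mResultSignal.signal()
    }
    bool waitForResult(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> l(mMutex);
        return mSignal.wait_for(l, timeout, [this] { return !mQueue.empty(); });
    }
    bool getNextResult(CaptureResult *out) {  // take from the head
        std::lock_guard<std::mutex> l(mMutex);
        if (mQueue.empty()) return false;
        *out = mQueue.front();
        mQueue.pop_front();
        return true;
    }
private:
    std::mutex mMutex;
    std::condition_variable mSignal;
    std::list<CaptureResult> mQueue;
};

class FrameProcessor {
public:
    using Listener = std::function<void(const CaptureResult &)>;
    void registerListener(int32_t requestId, Listener l) {
        mListeners[requestId].push_back(std::move(l));
    }
    // One pass of the processing loop: drain the queue and dispatch.
    int processAvailable(ResultQueue &q) {
        int n = 0;
        CaptureResult r{};
        while (q.getNextResult(&r)) {
            ++n;
            auto it = mListeners.find(r.requestId);
            if (it == mListeners.end()) continue;  // nobody interested
            for (auto &l : it->second) l(r);
        }
        return n;
    }
private:
    std::map<int32_t, std::vector<Listener>> mListeners;
};
```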

```cpp
void Camera3Device::sendCaptureResult(CameraMetadata &pendingMetadata,
        CaptureResultExtras &resultExtras,
        CameraMetadata &collectedPartialResult,
        uint32_t frameNumber,
        bool reprocess,
        const AeTriggerCancelOverride_t &aeTriggerCancelOverride) {
    // (excerpt: captureResult is assembled from the arguments before this call)
    insertResultLocked(&captureResult, frameNumber, aeTriggerCancelOverride);
}
```

This inserts the result into the result queue (mResultQueue) and then wakes whoever is waiting for results.

```cpp
void Camera3Device::insertResultLocked(CaptureResult *result, uint32_t frameNumber,
        const AeTriggerCancelOverride_t &aeTriggerCancelOverride) {
    // Update the camera metadata with the frame number and request ID
    if (result->mMetadata.update(ANDROID_REQUEST_FRAME_COUNT,
            (int32_t*)&frameNumber, 1) != OK) {
        // Error handling omitted in this excerpt.
    }
    if (result->mMetadata.update(ANDROID_REQUEST_ID, &result->mResultExtras.requestId, 1) != OK) {
        // Error handling omitted in this excerpt.
    }
    overrideResultForPrecaptureCancel(&result->mMetadata, aeTriggerCancelOverride);

    // Valid result, insert into queue
    List<CaptureResult>::iterator queuedResult =
            mResultQueue.insert(mResultQueue.end(), CaptureResult(*result));
    mResultSignal.signal();
}
```

Next, the FrameProcessor's threadLoop takes a result from the head of mResultQueue. The result's metadata also carries the frame number (ANDROID_REQUEST_FRAME_COUNT, set above), so it can be matched with the corresponding buffer queued through the GraphicBufferProducer.

极客笔记
