Tokenizer in Python

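As we all know, a huge amount of text data is available on the internet. However, most of us may not be familiar with the methods for processing it. We also know that in machine learning, handling the letters of our language is the tricky part, because machines can recognize numbers, not letters.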

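So how do we manipulate and clean text data to build a model? To answer this question, let us explore some remarkable concepts that underlie Natural Language Processing (NLP).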

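Solving an NLP problem is a multi-stage process. First, we have to clean the unstructured text data before we reach the modeling stage. Data cleaning involves a few key steps, as follows: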

- Word tokenization

- Predicting the part of speech of each token

- Text lemmatization

- Identifying and removing stop words, and many more.

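In the following tutorial, we will learn about one of these very basic steps, known as tokenization. We will see what tokenization is and why it is necessary for Natural Language Processing (NLP). We will also discover several methods for performing tokenization in Python.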

Understanding Tokenization

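Tokenization divides a large body of text into smaller pieces called tokens. These pieces, or tokens, are very useful for finding patterns and are considered a base step for stemming and lemmatization. (In data security, the same term refers to something different: replacing sensitive data elements with non-sensitive equivalents.)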

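Natural Language Processing (NLP) is used to build applications such as text classification, sentiment analysis, intelligent chatbots, and language translation. Understanding the patterns in text therefore becomes important for these purposes.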

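For now, though, consider stemming and lemmatization as the main steps for cleaning text data with NLP. Tasks such as text classification or spam filtering use NLP together with deep learning libraries such as Keras and TensorFlow.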

Understanding the Importance of Tokenization in NLP

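To understand the importance of tokenization, let us take the English language as an example. Pick any sentence and keep it in mind while reading the following section.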

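Before we can process natural language, we have to identify the words that make up a string. This makes tokenization the most basic step in Natural Language Processing (NLP).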

This step is important because the actual meaning of a text can be interpreted by analyzing each word in it.

Now, let us take the following string as an example:

My name is Jamie Clark.

After tokenizing the above string (and dropping the final full stop), we get the following output:

['My', 'name', 'is', 'Jamie', 'Clark']

This operation has several uses. We can use the tokenized form to:

- Count the total number of words in the text.

- Count word frequencies, that is, how many times a particular word occurs, and so on.
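As a minimal sketch of the two uses listed above, the snippet below tokenizes a made-up sample string with split(), strips surrounding punctuation from each token (a simplification assumed here, not a full tokenizer), and then counts the words with len() and collections.Counter. The sample text and variable names are illustrative only.

```python
from collections import Counter

# Illustrative sample text.
text = "My name is Jamie Clark. My friend's name is Ryan."

# Split on whitespace, then strip leading/trailing punctuation from each token.
tokens = [tok.strip(".,!?;:") for tok in text.split()]
tokens = [tok for tok in tokens if tok]  # drop tokens that were pure punctuation

total_words = len(tokens)       # total number of words
frequencies = Counter(tokens)   # how often each distinct word occurs

print(total_words)                  # 10
print(frequencies.most_common(2))   # [('My', 2), ('name', 2)]
```

Counter.most_common() lists words with equal counts in the order they were first seen, which is why 'My' comes before 'name' here.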

Now, let us look at several ways to perform tokenization for Natural Language Processing (NLP) in Python.

Some Methods to Perform Tokenization in Python

There are various ways to tokenize text data. Some of them are described below:

Tokenization Using the split() Function

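The split() function is one of the most basic ways to break up a string. It returns a list of strings after splitting the given string at a specified separator. By default, split() breaks the string at every run of whitespace. However, we can specify a different separator as needed.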
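A small sketch of how the separator changes the result. The sample strings are made up for illustration; the behavior shown (whitespace runs collapsed by default, empty strings for repeated explicit separators, and the optional maxsplit argument) is that of Python's built-in str.split().

```python
text = "Tokenize  this\tstring\nplease"

# No argument: split on runs of any whitespace (spaces, tabs, newlines).
default_tokens = text.split()
print(default_tokens)  # ['Tokenize', 'this', 'string', 'please']

# Explicit " " separator: split at every single space, so two adjacent
# spaces produce an empty string, and tabs/newlines are not separators.
space_tokens = text.split(" ")
print(space_tokens)  # ['Tokenize', '', 'this\tstring\nplease']

# A custom separator plus maxsplit=1: split at the first comma only.
limited = "a,b,c".split(",", 1)
print(limited)  # ['a', 'b,c']
```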

Let us consider the following example:

Example 1.1: Word Tokenization Using split()

my_text = """Let's play a game, Would You Rather! It's simple, you have to pick one or the other. Let's get started. Would you rather try Vanilla Ice Cream or Chocolate one? Would you rather be a bird or a bat? Would you rather explore space or the ocean? Would you rather live on Mars or on the Moon? Would you rather have many good friends or one very best friend? Isn't it easy though? When we have less choices, it's easier to decide. But what if the options would be complicated? I guess, you pretty much not understand my point, neither did I, at first place and that led me to a Bad Decision."""

print(my_text.split())

Output:

["Let's", 'play', 'a', 'game,', 'Would', 'You', 'Rather!', "It's", 'simple,', 'you', 'have', 'to', 'pick', 'one', 'or', 'the', 'other.', "Let's", 'get', 'started.', 'Would', 'you', 'rather', 'try', 'Vanilla', 'Ice', 'Cream', 'or', 'Chocolate', 'one?', 'Would', 'you', 'rather', 'be', 'a', 'bird', 'or', 'a', 'bat?', 'Would', 'you', 'rather', 'explore', 'space', 'or', 'the', 'ocean?', 'Would', 'you', 'rather', 'live', 'on', 'Mars', 'or', 'on', 'the', 'Moon?', 'Would', 'you', 'rather', 'have', 'many', 'good', 'friends', 'or', 'one', 'very', 'best', 'friend?', "Isn't", 'it', 'easy', 'though?', 'When', 'we', 'have', 'less', 'choices,', "it's", 'easier', 'to', 'decide.', 'But', 'what', 'if', 'the', 'options', 'would', 'be', 'complicated?', 'I', 'guess,', 'you', 'pretty', 'much', 'not', 'understand', 'my', 'point,', 'neither', 'did', 'I,', 'at', 'first', 'place', 'and', 'that', 'led', 'me', 'to', 'a', 'Bad', 'Decision.']

Explanation:

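In the above example, we used split() to break the paragraph into smaller pieces, i.e., words. Similarly, we can split a paragraph into sentences by passing a separator as the argument to split(). Sentences usually end with a full stop ".", so we can split the string at ". " (a full stop followed by a space).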

Let us illustrate this with the following example:

Example 1.2: Sentence Tokenization Using split()

my_text = """Dreams. Desires. Reality. There is a fine line between dream to become a desire and a desire to become a reality but expectations are way far then the reality. Nevertheless, we live in a world of mirrors, where we always want to reflect the best of us. We all see a dream, a dream of no wonder what; a dream that we want to be accomplished no matter how much efforts it needed but we try."""

print(my_text.split('. '))

Output:

['Dreams', 'Desires', 'Reality', 'There is a fine line between dream to become a desire and a desire to become a reality but expectations are way far then the reality', 'Nevertheless, we live in a world of mirrors, where we always want to reflect the best of us', 'We all see a dream, a dream of no wonder what; a dream that we want to be accomplished no matter how much efforts it needed but we try.']

Explanation:

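In the above example, we passed ". " (a full stop followed by a space) to split() to break the paragraph at full stops. A major drawback of split() is that it accepts only one separator at a time, so we cannot split on several delimiters (such as ".", "!", and "?") in a single call. Moreover, split() does not treat punctuation marks as separate tokens; they stay attached to the neighboring words.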
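To see both drawbacks in isolation, here is a small sketch on a made-up string that mixes "!", "?" and ".": only the single separator passed to split() is recognized, and punctuation stays glued to the words.

```python
text = "Wait! Is this a sentence? Yes. It is."

# Only the single separator ". " is recognized; "!" and "?" are ignored.
sentences = text.split(". ")
print(sentences)  # ['Wait! Is this a sentence? Yes', 'It is.']

# Splitting on whitespace leaves punctuation attached to the words.
words = text.split()
print(words)  # ['Wait!', 'Is', 'this', 'a', 'sentence?', 'Yes.', 'It', 'is.']
```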

Tokenization Using Regular Expressions in Python

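Before moving on to the next method, let us briefly understand regular expressions. A regular expression, also called RegEx, is a special sequence of characters that lets the user find or match other strings or sets of strings, using that sequence as a pattern.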

To work with regular expressions, Python provides a module called re. It is part of Python's standard library, so it needs no separate installation.
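Before the tokenization examples, here is a minimal sketch of two core re functions on a made-up string: re.findall() returns every non-overlapping match as a list, and re.search() returns the first match as a Match object (or None if nothing matches).

```python
import re

text = "Order 66 shipped on 2021-03-15 to 3 states."

# re.findall(): every non-overlapping run of one or more digits.
numbers = re.findall(r"\d+", text)
print(numbers)  # ['66', '2021', '03', '15', '3']

# re.search(): the first match of a YYYY-MM-DD shaped pattern, or None.
match = re.search(r"\d{4}-\d{2}-\d{2}", text)
date = match.group() if match else None
print(date)  # 2021-03-15
```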

Let us illustrate word-based and sentence-based tokenization using RegEx in Python with the following examples.

Example 2.1: Word Tokenization Using RegEx in Python

import re

my_text = """Joseph Arthur was a young businessman. He was one of the shareholders at Ryan Cloud's Start-Up with James Foster and George Wilson. The Start-Up took its flight in the mid-90s and became one of the biggest firms in the United States of America. The business was expanded in all major sectors of livelihood, starting from Personal Care to Transportation by the end of 2000. Joseph was used to be a good friend of Ryan."""

my_tokens = re.findall(r"[\w’]+", my_text)  # runs of word characters (or ’)

print(my_tokens)

Output:

['Joseph', 'Arthur', 'was', 'a', 'young', 'businessman', 'He', 'was', 'one', 'of', 'the', 'shareholders', 'at', 'Ryan', 'Cloud', 's', 'Start', 'Up', 'with', 'James', 'Foster', 'and', 'George', 'Wilson', 'The', 'Start', 'Up', 'took', 'its', 'flight', 'in', 'the', 'mid', '90s', 'and', 'became', 'one', 'of', 'the', 'biggest', 'firms', 'in', 'the', 'United', 'States', 'of', 'America', 'The', 'business', 'was', 'expanded', 'in', 'all', 'major', 'sectors', 'of', 'livelihood', 'starting', 'from', 'Personal', 'Care', 'to', 'Transportation', 'by', 'the', 'end', 'of', '2000', 'Joseph', 'was', 'used', 'to', 'be', 'a', 'good', 'friend', 'of', 'Ryan']

Explanation:

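In the above example, we imported the re library to use its functions. We then used its findall() function, which finds all non-overlapping substrings that match the pattern passed as an argument and returns them in a list.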

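Here, "\w" represents any word character, including letters, digits, and the underscore, and "+" means "one or more occurrences". So with the pattern [\w’]+, the program collects every run of word characters until it reaches some other character. Note that the character class contains the typographic apostrophe ’ rather than the straight apostrophe ', which is why "Cloud's" in the sample text is split into 'Cloud' and 's'.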
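The choice of character class decides what counts as one token. The sketch below compares three patterns on a fragment of the sample text; the [\w'] and [\w'-] variants are illustrative alternatives, not the pattern used in Example 2.1.

```python
import re

sample = "Ryan Cloud's Start-Up"

# \w+ : runs of letters, digits and underscores only.
plain = re.findall(r"\w+", sample)
print(plain)  # ['Ryan', 'Cloud', 's', 'Start', 'Up']

# [\w']+ : also accept the straight apostrophe, keeping "Cloud's" whole.
with_apostrophe = re.findall(r"[\w']+", sample)
print(with_apostrophe)  # ['Ryan', "Cloud's", 'Start', 'Up']

# [\w'-]+ : also accept hyphens (a trailing "-" inside a class is literal).
with_hyphen = re.findall(r"[\w'-]+", sample)
print(with_hyphen)  # ['Ryan', "Cloud's", 'Start-Up']
```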

Now, let us look at sentence tokenization using the regular expression method.

Example 2.2: Sentence Tokenization Using RegEx in Python

import re

my_text = """The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America. The product became so successful among the people that the production was increased. Two new plant sites were finalized, and the construction was started. Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care. Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories. Many popular magazines were started publishing Critiques about him."""

my_sentences = re.compile('[.!?] ').split(my_text)

print(my_sentences)

Output:

['The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America', 'The product became so successful among the people that the production was increased', 'Two new plant sites were finalized, and the construction was started', "Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care", 'Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories', 'Many popular magazines were started publishing Critiques about him.']

Explanation:

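In the above example, we used the re library's compile() function with the pattern '[.!?] ', which matches a full stop, exclamation mark, or question mark followed by a space, and then called split() on the compiled pattern to split the string at each match. As a result, the program splits the text into sentences wherever it encounters these characters; the matched delimiters are removed, so only the last sentence keeps its full stop.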
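If the sentences should keep their closing punctuation, one option (an alternative to the approach above, not part of the original example) is to split only on the whitespace that follows ".", "!", or "?", using a lookbehind assertion so that the punctuation itself is not consumed. A minimal sketch with a made-up sample:

```python
import re

text = "The product sold well! Was production increased? Yes. Two plants opened."

# (?<=[.!?]) is a lookbehind: the whitespace must follow ".", "!" or "?",
# but the punctuation itself is not part of the match, so it is kept.
sentences = re.split(r"(?<=[.!?])\s+", text)
print(sentences)
# ['The product sold well!', 'Was production increased?', 'Yes.', 'Two plants opened.']
```

Like the original pattern, this still breaks after abbreviations such as "Mr." or "U.S.", which is one reason dedicated sentence tokenizers exist.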

Tokenization Using the Natural Language Toolkit in Python

The Natural Language Toolkit, also known as NLTK, is a library written in Python. NLTK is commonly used for symbolic and statistical Natural Language Processing and works well with text data.

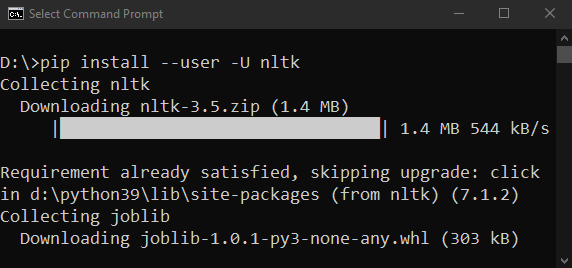

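The Natural Language Toolkit (NLTK) is a third-party library that can be installed from the command line or terminal with the following command: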

$ pip install --user -U nltk

To verify the installation, import the nltk library in a program and run it as shown below:

import nltk

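If the program raises no error, the library has been installed successfully. Otherwise, repeat the installation steps above and read the official documentation for more details. Note also that word_tokenize() and sent_tokenize() rely on NLTK's Punkt tokenizer models, which are downloaded separately, for example with nltk.download('punkt') (recent NLTK releases look for the 'punkt_tab' resource instead); without them, the examples below raise a LookupError.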

The Natural Language Toolkit (NLTK) has a module named tokenize (nltk.tokenize). It provides two main categories of tokenization: Word Tokenize and Sentence Tokenize.

- Word Tokenize: the word_tokenize() method splits a string into tokens (words and punctuation marks).

- Sentence Tokenize: the sent_tokenize() method splits a string or paragraph into sentences.

Let us consider an example of each of these two methods:

Example 3.1: Word Tokenization Using the NLTK Library in Python

from nltk.tokenize import word_tokenize

my_text = """The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America. The product became so successful among the people that the production was increased. Two new plant sites were finalized, and the construction was started. Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care. Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories. Many popular magazines were started publishing Critiques about him."""

print(word_tokenize(my_text))

Output:

['The', 'Advertisement', 'was', 'telecasted', 'nationwide', ',', 'and', 'the', 'product', 'was', 'sold', 'in', 'around', '30', 'states', 'of', 'America', '.', 'The', 'product', 'became', 'so', 'successful', 'among', 'the', 'people', 'that', 'the', 'production', 'was', 'increased', '.', 'Two', 'new', 'plant', 'sites', 'were', 'finalized', ',', 'and', 'the', 'construction', 'was', 'started', '.', 'Now', ',', 'The', 'Cloud', 'Enterprise', 'became', 'one', 'of', 'America', "'s", 'biggest', 'firms', 'and', 'the', 'mass', 'producer', 'in', 'all', 'major', 'sectors', ',', 'from', 'transportation', 'to', 'personal', 'care', '.', 'Director', 'of', 'The', 'Cloud', 'Enterprise', ',', 'Ryan', 'Cloud', ',', 'was', 'now', 'started', 'getting', 'interviewed', 'over', 'his', 'success', 'stories', '.', 'Many', 'popular', 'magazines', 'were', 'started', 'publishing', 'Critiques', 'about', 'him', '.']

Explanation:

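In the above program, we imported the word_tokenize() method from the tokenize module of the NLTK library. The method splits the string into tokens and stores them in a list, which we then print. Unlike split(), it treats full stops and other punctuation marks in the sentences as separate tokens, and it splits the possessive "America's" into 'America' and "'s".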
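For comparison, and purely as an illustration rather than a substitute for NLTK, a plain re pattern can also emit punctuation marks as separate tokens. This sketch uses the standard library only and its rules are much simpler than those of word_tokenize(); for example, it splits "America's" into 'America', "'" and 's' rather than 'America' and "'s".

```python
import re

text = "Now, The Cloud Enterprise became one of America's biggest firms."

# \w+ matches a run of word characters; [^\w\s] matches one character that
# is neither a word character nor whitespace, i.e. a punctuation mark.
tokens = re.findall(r"\w+|[^\w\s]", text)
print(tokens)
# ['Now', ',', 'The', 'Cloud', 'Enterprise', 'became', 'one', 'of', 'America', "'", 's', 'biggest', 'firms', '.']
```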

Example 3.2: Sentence Tokenization Using the NLTK Library in Python

from nltk.tokenize import sent_tokenize

my_text = """The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America. The product became so successful among the people that the production was increased. Two new plant sites were finalized, and the construction was started. Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care. Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories. Many popular magazines were started publishing Critiques about him."""

print(sent_tokenize(my_text))

Output:

['The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America.', 'The product became so successful among the people that the production was increased.', 'Two new plant sites were finalized, and the construction was started.', "Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care.", 'Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories.', 'Many popular magazines were started publishing Critiques about him.']

Explanation:

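In the above program, we imported the sent_tokenize() method from the tokenize module of the NLTK library. The method breaks the paragraph into sentences and stores them in a list, which we then print. Unlike the split()- and RegEx-based approaches above, each sentence keeps its final full stop.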

Conclusion

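In the above tutorial, we introduced the concept of tokenization and its role in the overall Natural Language Processing (NLP) pipeline. We also discussed several methods in Python for tokenizing a given text or string, covering both word tokenization and sentence tokenization.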

极客笔记
